A general guideline to migrate your existing user database to Logto

This article explains how to use existing tools to migrate your existing user data to Logto while Logto does not yet provide dedicated data migration services.

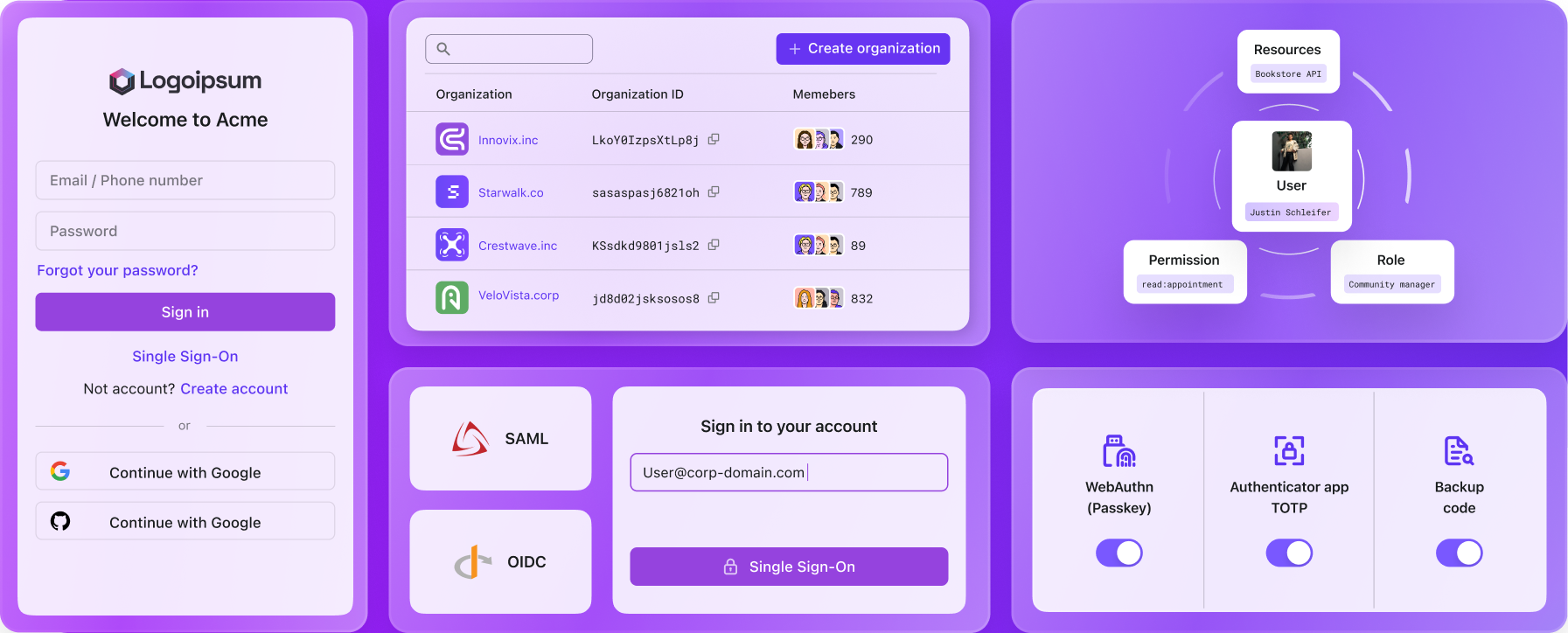

Logto does not yet ship a dedicated set of data migration tools, but the basic capabilities of the Management API are open. That is enough for users to migrate an existing user database by writing scripts.

In response to requests from community users, and because we do not currently have documentation covering the specific steps of user database migration, this article gives a proper introduction to help you find a concrete approach and save time reading Logto code and documentation.

Step 1: Understand Logto's basic user data structure and use case

Logto uses PostgreSQL under the hood. Besides its performance advantages, an important reason for this choice is that PostgreSQL supports the JSON/JSONB data types and allows indexes to be built on values inside JSON data, which balances database performance with extensibility.

For the full details of Logto's user data structure, refer to the user reference. Here we focus on the aspects where Logto may differ from other identity services.

id

This is a randomly generated internal unique identifier for users of Logto. Users are unaware of id when using Logto-based services.

Engineers familiar with databases should not find this strange. Even the most rudimentary identity systems will have an id to uniquely identify users, although their forms often differ. Some identity services may use username to uniquely identify users.

username, primaryEmail, primaryPhone

Username, primary email, and primary phone are where Logto differs greatly from other identity systems: all three can serve as unique identifiers that end users can see.

In many other identity systems, only the username is used for identification (usernames cannot be duplicated between accounts), which is easy to understand.

But in Logto, primary email and primary phone are also used to distinguish users. That is, if user A already has a given address as their primary email, then no other user B can add that same address as their primary email. Primary phone works the same way.

Some other identity systems allow registering multiple accounts with different usernames but binding the same email/phone, which is not allowed in Logto (emails/phones can be added to Logto’s customData). This is because primary email/phone in Logto can be used for passwordless sign-in.
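Because of this uniqueness rule, it can help to scan the old data for collisions before migrating. Below is a minimal sketch of such a check, assuming the legacy users have already been loaded as dictionaries; the `legacyId` key and the sample rows are hypothetical, and values are compared exactly as stored.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Mapping, Optional

# Identifier fields that must be unique across Logto users.
UNIQUE_FIELDS = ("username", "primaryEmail", "primaryPhone")


def find_identifier_conflicts(
    users: Iterable[Mapping[str, Optional[str]]],
) -> Dict[str, Dict[str, List[str]]]:
    """Return {field: {value: [legacy ids sharing that value]}} for duplicates only."""
    seen: Dict[str, Dict[str, List[str]]] = {
        field: defaultdict(list) for field in UNIQUE_FIELDS
    }
    for user in users:
        for field in UNIQUE_FIELDS:
            value = user.get(field)
            if value:  # missing or empty identifiers cannot collide
                seen[field][value].append(str(user["legacyId"]))
    return {
        field: {value: ids for value, ids in values.items() if len(ids) > 1}
        for field, values in seen.items()
    }


# Hypothetical legacy rows: two accounts share one email address.
legacy_users = [
    {"legacyId": "1", "username": "alice", "primaryEmail": "shared@example.com", "primaryPhone": None},
    {"legacyId": "2", "username": "alice2", "primaryEmail": "shared@example.com", "primaryPhone": None},
    {"legacyId": "3", "username": "bob", "primaryEmail": "bob@example.com", "primaryPhone": "15550100"},
]
conflicts = find_identifier_conflicts(legacy_users)
```

For each conflict, one account can keep the address as its primary email and the others can carry it in customData instead.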

identities

Logto defines the identities field as a JSON type: an object keyed by social provider name, where each entry holds the social account's userId and, optionally, further details.

In recent years, to make it easier to acquire new users, identity systems have allowed users to sign in quickly through existing social accounts with a large user base, such as Google and Facebook.

The identities field stores this social login information. For a user who has linked Facebook and GitHub accounts, facebook and github are the names of the social providers, and userId is the id of the user's social account used for login. The details also include other information that the user has authorized the social provider to share, which is added to the user's Logto user profile at specific times.

If the previous database contains the name of the social provider used by the user (e.g. facebook, google) and the id of the user's account at that provider (the userId described above), then the Logto user can log in directly with the same social account.
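To make the shape concrete, here is a rough Python rendering of the identities structure described above, together with a helper that builds it from social links in the old database. This is a sketch under assumptions: the type names, the `build_identities` helper, and the sample ids are hypothetical, and the input is assumed to be pairs of provider name and social account id.

```python
from typing import Any, Dict, Iterable, Tuple, TypedDict


class Identity(TypedDict, total=False):
    userId: str              # id of the user's account at the social provider
    details: Dict[str, Any]  # extra profile data the provider shared, if any


Identities = Dict[str, Identity]  # keyed by provider name, e.g. "facebook"


def build_identities(links: Iterable[Tuple[str, str]]) -> Identities:
    """Turn (provider_name, social_user_id) pairs from the old database into identities."""
    return {provider: {"userId": social_id} for provider, social_id in links}


# Hypothetical social links exported for one legacy user.
identities = build_identities([("facebook", "106077000000000"), ("github", "12345678")])
```

The provider names need to match the names of the social connectors configured in Logto for the same-account login to work.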

customData

This field can store any user-related information, such as the emails and phones mentioned above that cannot be used for passwordless sign-in but may still be used to receive notifications or for other business functions.

The other fields are relatively easy to understand (except for passwordEncrypted and passwordEncryptionMethod, which are explained later); refer to the documentation for those.
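As an illustration of how extra contact details might be routed into customData, the sketch below keeps the first email and phone as the primary identifiers and stores the rest as custom data. The `split_contacts` helper and the `secondaryEmails` / `secondaryPhones` key names are hypothetical; customData has no required layout, so choose whatever keys suit your business logic.

```python
from typing import Any, Dict, List


def split_contacts(emails: List[str], phones: List[str]) -> Dict[str, Any]:
    """Keep the first email/phone as primary; store the rest under customData."""
    custom_data: Dict[str, Any] = {}
    if len(emails) > 1:
        custom_data["secondaryEmails"] = emails[1:]  # hypothetical key name
    if len(phones) > 1:
        custom_data["secondaryPhones"] = phones[1:]  # hypothetical key name
    return {
        "primaryEmail": emails[0] if emails else None,
        "primaryPhone": phones[0] if phones else None,
        "customData": custom_data,
    }


# Hypothetical legacy user with two emails and one phone.
fields = split_contacts(["alice@example.com", "alice.work@example.com"], ["15550100"])
```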

Step 2: Writing database migration scripts

For large-scale database migration, writing migration scripts is the most common approach. We will provide a simple example to help understand how to write migration scripts to meet different needs.

Note that the migration scripts skip the step of retrieving the original data. There are many ways to obtain it, such as exporting from the database to files and then reading those files, or retrieving it through APIs. Since retrieval is not the focus of a migration script, we will not discuss it in detail here.

When you see tenant_id in the migration script, you may find it strange. Logto is based on a multi-tenant architecture. For open source Logto (Logto OSS) users, you can just set the tenant_id of the user to default.
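For Logto OSS, the core of such a script could look like the sketch below, which prepares a parameterized insert for one user with tenant_id set to default. It is not Logto's official migration script: the table and column names, the id length and alphabet, and the helper names are assumptions to verify against your Logto version's schema, and the code only builds the statement and parameters without connecting to a database.

```python
import json
import secrets
import string
from typing import Any, Dict, Tuple

ID_ALPHABET = string.ascii_lowercase + string.digits

# Assumed table and column names; check them against your Logto schema.
INSERT_SQL = (
    "insert into users "
    "(tenant_id, id, username, primary_email, primary_phone, identities, custom_data) "
    "values (%s, %s, %s, %s, %s, %s::jsonb, %s::jsonb)"
)


def generate_user_id(length: int = 12) -> str:
    """Random internal id; the length and alphabet here are assumptions."""
    return "".join(secrets.choice(ID_ALPHABET) for _ in range(length))


def to_insert_params(user: Dict[str, Any], tenant_id: str = "default") -> Tuple[Any, ...]:
    """Map one legacy user dict to the parameter tuple for INSERT_SQL."""
    return (
        tenant_id,
        generate_user_id(),
        user.get("username"),
        user.get("primaryEmail"),
        user.get("primaryPhone"),
        json.dumps(user.get("identities", {})),  # serialized for the JSONB column
        json.dumps(user.get("customData", {})),
    )


# Hypothetical legacy user already mapped to Logto field names.
legacy_user = {
    "username": "alice",
    "primaryEmail": "alice@example.com",
    "identities": {"github": {"userId": "12345678"}},
}
params = to_insert_params(legacy_user)
```

With a PostgreSQL driver that uses `%s` placeholders, `INSERT_SQL` and `params` would be passed to the cursor's execute call, ideally in batches inside a transaction so a failed run can be rolled back.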

For self-hosted Logto OSS users, the database connection is easy to obtain. For Logto Cloud users, however, we currently cannot provide database connection permissions for security reasons. These users need to refer to the API Docs and use the user-related APIs to migrate users. We understand that this method is not suitable for large-scale user data migration, but it can still handle migrating a limited number of users at this stage.
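For the API route, a script would send one request per user. The sketch below only builds such a request using the Python standard library and does not send it. The `/api/users` path, the accepted body fields, the tenant endpoint, and the token placeholder are assumptions here; confirm them in the API Docs and obtain a real Management API access token before use.

```python
import json
import urllib.request
from typing import Any, Dict


def build_create_user_request(
    endpoint: str, access_token: str, user: Dict[str, Any]
) -> urllib.request.Request:
    """Build (but do not send) a POST request that creates one user."""
    # Drop empty fields so the API only receives identifiers that actually exist.
    payload = {key: value for key, value in user.items() if value not in (None, "", {})}
    return urllib.request.Request(
        url=f"{endpoint.rstrip('/')}/api/users",  # assumed path, see the API Docs
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_create_user_request(
    "https://your-tenant.logto.app/",  # hypothetical tenant endpoint
    "ACCESS_TOKEN_PLACEHOLDER",        # hypothetical token
    {"username": "alice", "primaryEmail": "alice@example.com", "primaryPhone": None},
)
```

Calling `urllib.request.urlopen(request)` would send it. For more than a handful of users, add retries and throttling so the migration stays within any API rate limits.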

Step 3: Hashed password migration challenge and potential workaround

In our previous blog, we talked about some measures to prevent password attacks. One thing identity infra providers can do is not store passwords in plaintext but save hashed passwords.

Another blog post explained password hashes, where we stated that hash values are irreversible.

The second blog post also compared the evolution of some hashing algorithms. Logto itself uses the Argon2i algorithm mentioned in the article and does not support other hash algorithms for now. This means that password hashes of old user databases using other hashing algorithms cannot be directly migrated to Logto's database.

Even if Logto supported other commonly used hash algorithms in addition to Argon2i, it would still be difficult to migrate old data directly, because systems differ in how they generate, store, and combine the salt with the password when hashing.

In addition to supporting other hashing algorithms in the future, Logto is also likely to provide custom salt calculation methods to adapt to various situations.

Until then, you can use Logto's sign-in experience configuration to let users sign in another way (such as email + verification code) and set a new password (which will be hashed with Argon2i) before entering the app. The new password can then be used for later sign-ins.

Note that this workaround does not work if the original user data only supports signing in with a password. The workaround sidesteps the password hash incompatibility by using an alternative sign-in method together with the "required information completion" mechanism in Logto's end-user flow, so when password sign-in is the only option in the original data, there is no alternative sign-in method to fall back on.
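Before migrating, it may be worth counting how many users the workaround can actually reach. The sketch below splits users into those with some alternative sign-in method and those with a password only. Treating a linked social identity as an alternative method is our assumption, and the helper names and sample users are hypothetical.

```python
from typing import Any, Dict, List, Tuple


def can_use_workaround(user: Dict[str, Any]) -> bool:
    """True if the user has any sign-in method that does not need the old password."""
    return bool(
        user.get("primaryEmail") or user.get("primaryPhone") or user.get("identities")
    )


def split_by_workaround(
    users: List[Dict[str, Any]],
) -> Tuple[List[Dict[str, Any]], List[Dict[str, Any]]]:
    """Return (users the workaround covers, users with password-only sign-in)."""
    reachable = [user for user in users if can_use_workaround(user)]
    password_only = [user for user in users if not can_use_workaround(user)]
    return reachable, password_only


# Hypothetical users: only "carol" has nothing but a username and password.
users = [
    {"username": "alice", "primaryEmail": "alice@example.com"},
    {"username": "bob", "identities": {"github": {"userId": "12345678"}}},
    {"username": "carol"},
]
reachable, password_only = split_by_workaround(users)
```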

The workaround mentioned here does not really solve the hashed password migration problem, but provides an alternative solution from the Logto product perspective to avoid hindering users from logging into your product.

Step 4: Gradual switch to Logto and status monitoring

After completing the above steps, end users can already log in and use your service through Logto.

Since the service is usually not cut off during the migration, the user data synchronized to Logto may not be fully up to date. When such uncommon cases are detected, the affected records need to be synchronized from the old database to Logto.

After a sufficiently long period without inconsistent data (or once other defined metrics are met), the old database can be retired completely.
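One way to monitor this is to compare both sides periodically and list the records that need to be synchronized. The following is a minimal sketch, assuming both data sets are loaded into dictionaries keyed by a shared identifier and that each record carries a comparable `updatedAt` timestamp; the key choice, the field name, and the sample data are hypothetical.

```python
from typing import Any, Dict, List, Mapping


def find_stale_users(
    old_users: Mapping[str, Mapping[str, Any]],
    logto_users: Mapping[str, Mapping[str, Any]],
) -> Dict[str, List[str]]:
    """Compare records by shared key and report which ones need syncing to Logto."""
    missing = sorted(key for key in old_users if key not in logto_users)
    outdated = sorted(
        key
        for key, record in old_users.items()
        if key in logto_users and record["updatedAt"] > logto_users[key]["updatedAt"]
    )
    return {"missing": missing, "outdated": outdated}


# Hypothetical snapshots keyed by username, with Unix timestamps.
old_db = {
    "alice": {"updatedAt": 1700000500},  # changed after the migration ran
    "bob": {"updatedAt": 1700000000},
    "carol": {"updatedAt": 1700000900},  # registered during the migration
}
logto_db = {
    "alice": {"updatedAt": 1700000100},
    "bob": {"updatedAt": 1700000100},
}
report = find_stale_users(old_db, logto_db)
```

An empty report over a long enough window is one possible metric for deciding when the old database is no longer needed.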

Conclusion

In this post, we introduced the steps an ideal database migration would go through.

If you run into problems not mentioned above, do not hesitate to join our community or contact us for help. The problems you meet may also be encountered by others, and will become issues we need to consider when designing migration tools in the future.