MCP server vs Tools vs Agent skill

MCP servers, tool use, and agent skills: understanding the layers of modern AI development

As AI-native development accelerates, more engineers are integrating tools like Claude, Cursor, and VS Code AI extensions into their workflows. But confusion about the underlying concepts is common:

- What exactly is an MCP server?

- How do MCP tools differ from the server?

- What does “tool use” actually mean?

- What is a Claude Code skill, and how does it fit in?

- When should you use each one?

- How do these layers work together for real engineering tasks?

This article gives a clear, practical explanation of the three concepts, their boundaries, and how they shape the next generation of software development.

MCP: Three components, one protocol

MCP (Model Context Protocol), an open standard introduced by Anthropic, defines a consistent way for AI applications to connect models to external resources, APIs, tools, databases, and internal systems.

There are three core elements:

- MCP server: A backend service that exposes capabilities to AI clients

- MCP tools: The specific, callable actions provided by the server

- MCP hosts and clients: The AI applications, such as Claude Desktop, Cursor, and VS Code extensions, that connect to MCP servers and call their tools

And outside MCP, but often confused with it:

- Claude Code Skill: Claude’s built-in programming intelligence within IDEs

Understanding these layers helps you design better AI workflows.

What are tools? (General AI tool use)

Before talking about MCP tools or Claude Code skills, it helps to understand what “tools” mean in the AI world overall.

In modern LLM systems, tools are:

External operations an AI model can call to do something in the real world, beyond text generation.

They give the model agency. A tool can be almost anything:

- an API endpoint

- a database query

- a filesystem operation

- a browser action

- running code

- sending an email

- creating a user

- interacting with cloud resources

- calling an LLM function

When the model decides to call a tool, it steps outside pure language and performs an action with real effects.

Why tools exist

Tools exist because text-only models hit a ceiling very quickly.

A model can explain things, reason about a problem, or draft code, but it can’t actually touch your systems. It doesn’t know how to query your database, change a file, call an API, deploy something to the cloud, or run a long operation that spans multiple steps.

Once you give the model a set of tools, these gaps disappear. The model gains a controlled way to interact with real infrastructure, while you keep strict boundaries around what it can do and how it can do it. Tools provide structure, safety, and the execution layer that text-only models fundamentally lack.

The core idea of tool use

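A tool is defined by a contract: a name, a natural-language description of what it does, and a schema describing the input it accepts.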
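For illustration, here is what such a contract might look like, written as a Python dict in the shape MCP uses for tool definitions (`name`, `description`, and a JSON Schema `inputSchema`). The `create_organization` tool and its fields are hypothetical. Before executing a call, a host can check the model's arguments against the schema; the small checker below only handles `required`, primitive types, and `additionalProperties: false`, so treat it as a sketch rather than a substitute for a real JSON Schema validator.

```python
# Hypothetical tool contract, shaped like an MCP tool definition:
# a name, a description the model reads, and a JSON Schema for the input.
CREATE_ORGANIZATION_TOOL = {
    "name": "create_organization",
    "description": "Create a new organization in the current tenant.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "name": {"type": "string", "description": "Display name"},
            "description": {"type": "string"},
        },
        "required": ["name"],
        "additionalProperties": False,  # reject fields the schema doesn't declare
    },
}

# Map the JSON Schema type names used above to Python types.
_JSON_TYPES = {"string": str, "integer": int, "boolean": bool, "object": dict}


def check_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return a list of problems with `arguments`; an empty list means they look valid.

    Only checks required fields, undeclared fields, and primitive types.
    """
    schema = tool["inputSchema"]
    props = schema.get("properties", {})
    allow_extra = schema.get("additionalProperties", True)
    problems = []
    for field in schema.get("required", []):
        if field not in arguments:
            problems.append(f"missing required field: {field}")
    for key, value in arguments.items():
        if key not in props:
            if allow_extra is False:
                problems.append(f"unexpected field: {key}")
            continue
        expected = _JSON_TYPES.get(props[key].get("type"))
        if expected is not None and not isinstance(value, expected):
            problems.append(f"wrong type for {key}")
    return problems


print(check_arguments(CREATE_ORGANIZATION_TOOL, {"name": "Acme"}))  # []
print(check_arguments(CREATE_ORGANIZATION_TOOL, {"description": 42}))
# ['missing required field: name', 'wrong type for description']
```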

When the model sees this definition, it can:

- understand what the tool does

- reason about when to use it

- construct the input object

- call the tool

- incorporate the output in its next steps

Tools effectively turn an LLM into an orchestrator.
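The orchestration loop can be sketched in a few lines. This is a minimal sketch, not any vendor's API: `scripted_model` is a hypothetical stand-in for an LLM that returns either a tool call or a final answer, and the two tools are toy in-memory functions. What matters is the shape: the host runs whichever tool the model picks, feeds the result back, and repeats until the model replies in plain text. The two-step chain here comes from the "model", not from either tool.

```python
from typing import Callable

# Hypothetical toy backend and tools: small, well-defined operations.
USERS = {"u1": {"name": "Ada", "org": "acme"}}


def get_user(user_id: str) -> dict:
    return USERS[user_id]


def count_org_members(org: str) -> int:
    return sum(1 for u in USERS.values() if u["org"] == org)


TOOLS: dict[str, Callable[..., object]] = {
    "get_user": get_user,
    "count_org_members": count_org_members,
}


def scripted_model(messages: list[dict]) -> dict:
    """Stand-in for an LLM: decides the next step from the tool results so far."""
    results = [m["content"] for m in messages if m["role"] == "tool"]
    if not results:
        return {"tool": "get_user", "arguments": {"user_id": "u1"}}
    if len(results) == 1:
        return {"tool": "count_org_members", "arguments": {"org": results[0]["org"]}}
    return {"answer": f"{results[0]['name']}'s organization has {results[1]} member(s)."}


def run_agent(question: str, max_steps: int = 5) -> str:
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        step = scripted_model(messages)
        if "answer" in step:  # the model is done and answers in text
            return step["answer"]
        # The model only *requests* a tool; the host executes it.
        result = TOOLS[step["tool"]](**step["arguments"])
        messages.append({"role": "tool", "name": step["tool"], "content": result})
    raise RuntimeError("too many steps without a final answer")


print(run_agent("How many people are in Ada's organization?"))
# Ada's organization has 1 member(s).
```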

Important boundaries

Tools are often misunderstood as something more powerful than they are. They’re not a form of intelligence, and they don’t contain business rules, permissions, or workflow logic. A tool is simply a small, well-defined operation the model can call, nothing more. The larger behavior you see, like multi-step workflows or decision-making, doesn’t come from the tool itself but from the model chaining several tools together using its own reasoning.

Why tools matter in agent ecosystems

Modern agent frameworks and protocols, such as OpenAI function calling, Claude tool use, MCP, and LangChain, are all built on the same principle: an LLM only becomes genuinely useful once it can take action. Language alone isn’t enough; the model needs a way to reach into the real world.

Tools are the layer that makes this possible. They connect the model’s reasoning with the actual capabilities of your system, and they do so in a controlled, permissioned environment. With this bridge in place, AI stops being a passive text generator and becomes something closer to an operator that can fetch data, modify files, coordinate workflows, or trigger backend processes.

As a result, tool use has quietly become the backbone of almost every serious AI application today, from IDE assistants and backend automation to DevOps copilots, IAM automation, data agents, and enterprise workflow engines. MCP sits on top of this evolution by offering a consistent protocol for exposing these tools, standardizing how models discover and interact with real system capabilities.

MCP server: the backend capability layer for AI

Think of an MCP server as a backend service that exposes carefully scoped capabilities to AI assistants. Instead of giving a model unrestricted access to your systems, you wrap the parts you want it to use: internal APIs, authentication logic, database operations, business workflows, or permission checks.

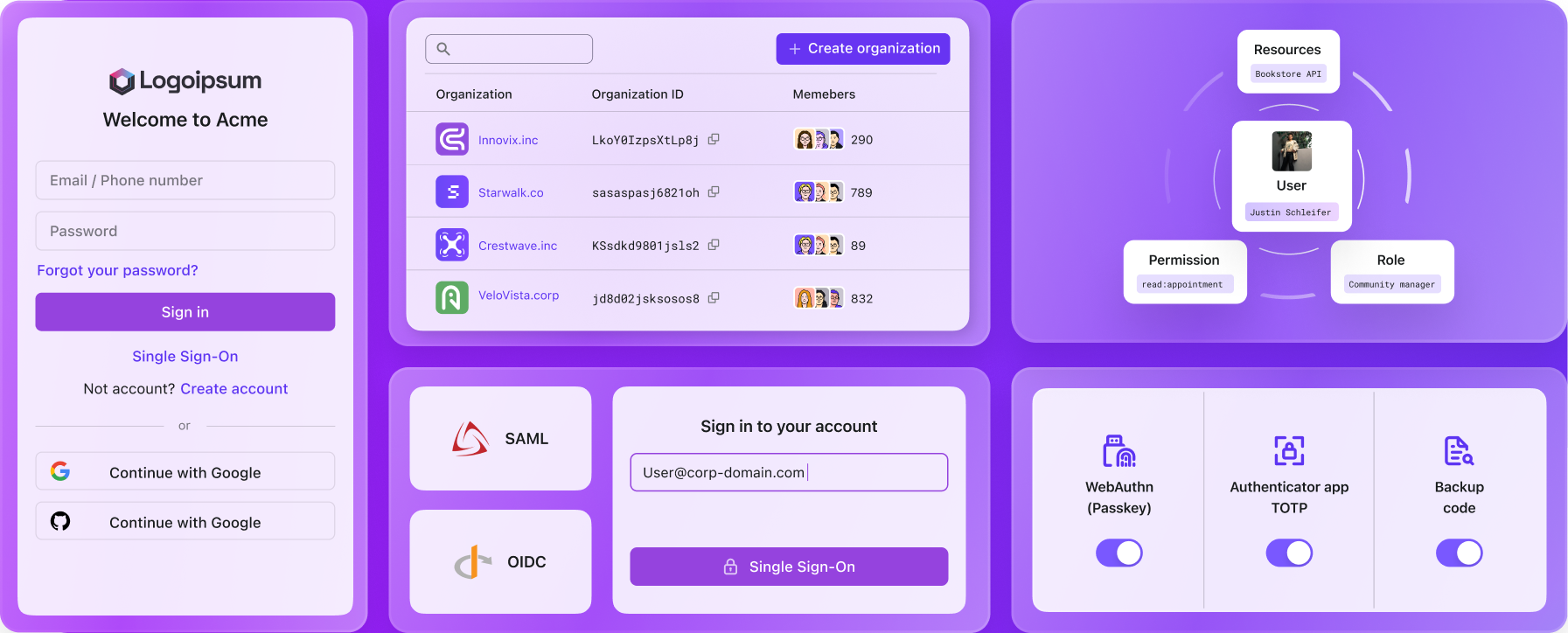

In practice, an IAM platform like Logto might surface actions such as:

- listing or creating organizations

- generating org or M2M tokens

- inspecting a user’s access to an API

- reading application configuration

- producing secure backend snippets based on tenant settings
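To make the server's role concrete, here is a minimal, transport-free sketch of the two MCP requests that matter for tools: `tools/list`, which returns tool definitions, and `tools/call`, which runs one tool by `name` with `arguments` and returns text `content` plus an `isError` flag. The organization store and tool names are hypothetical placeholders, not Logto's actual API. A real server would also handle the JSON-RPC transport, the initialization handshake, and capability negotiation, which the official MCP SDKs take care of.

```python
import json
from typing import Any, Callable

# Hypothetical in-memory backend standing in for an IAM system.
ORGS: dict[str, dict] = {}


def list_organizations() -> list[dict]:
    return list(ORGS.values())


def create_organization(name: str) -> dict:
    org = {"id": f"org_{len(ORGS) + 1}", "name": name}
    ORGS[org["id"]] = org
    return org


# Registry: tool definition (what clients see) paired with its handler (what runs).
REGISTRY: dict[str, tuple[dict, Callable[..., Any]]] = {
    "list_organizations": (
        {"name": "list_organizations",
         "description": "List organizations in the tenant.",
         "inputSchema": {"type": "object", "properties": {}}},
        list_organizations,
    ),
    "create_organization": (
        {"name": "create_organization",
         "description": "Create an organization.",
         "inputSchema": {"type": "object",
                         "properties": {"name": {"type": "string"}},
                         "required": ["name"]}},
        create_organization,
    ),
}


def handle(request: dict) -> dict:
    """Dispatch one JSON-RPC request for the tools/list and tools/call methods."""
    rid, method, params = request.get("id"), request["method"], request.get("params", {})
    if method == "tools/list":
        result = {"tools": [definition for definition, _ in REGISTRY.values()]}
    elif method == "tools/call":
        entry = REGISTRY.get(params.get("name"))
        if entry is None:
            return {"jsonrpc": "2.0", "id": rid,
                    "error": {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}}
        _, handler = entry
        try:
            output = handler(**params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": json.dumps(output)}], "isError": False}
        except Exception as exc:  # tool failures go back to the model as results
            result = {"content": [{"type": "text", "text": str(exc)}], "isError": True}
    else:
        return {"jsonrpc": "2.0", "id": rid,
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": rid, "result": result}


resp = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
               "params": {"name": "create_organization", "arguments": {"name": "Acme"}}})
print(resp["result"]["content"][0]["text"])  # {"id": "org_1", "name": "Acme"}
```

Every client that speaks the protocol sees the same definitions and goes through the same `handle` entry point, which is where the shared contract and the single place for checks come from.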

The value of an MCP server comes from the structure around these capabilities.

Rather than scattering integrations across different clients, everything sits behind one well-defined interface. This brings a few practical advantages:

- multiple AI clients can reuse the same backend logic

- security and permission boundaries live in one place

- you maintain a consistent contract for every agent

- enterprise systems keep tighter control over what AI can actually do

For this reason, MCP servers fit naturally into environments where correctness and access control matter—identity systems, payments, CRM, DevOps, and internal admin tooling.

MCP tools: atomic actions exposed to AI models

We covered tools in general in the previous section. MCP tools are not servers; they are the individual actions a server exposes.

Example tools:

- create_organization

- invite_member

- list_applications

- generate_backend_snippet

- inspect_user_access

Tools are:

- small

- stateless

- easy for LLMs to call

- the building blocks of larger workflows

They do not maintain context or provide security policy by themselves. They are simply the “functions” exposed via MCP.
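As a rough illustration of "small and stateless", here is a hypothetical `inspect_user_access` tool written as a plain function. In this sketch everything it needs arrives as arguments, it remembers nothing between calls, and it makes no decision about whether the caller is allowed to ask. The role-to-scope mapping is made up for the example; a real tool would look up the user's roles in the backend.

```python
# Hypothetical role -> scope mapping that a real backend would store.
ROLE_SCOPES = {
    "admin": {"read:orgs", "write:orgs", "read:apps"},
    "viewer": {"read:orgs", "read:apps"},
}


def inspect_user_access(roles: list[str], api_scopes: list[str]) -> dict:
    """Report which of an API's scopes the given roles grant.

    Stateless: the same inputs always give the same output, with no memory of
    earlier calls. It does not check whether the *caller* may run it; that
    policy belongs to the server wrapping the tool.
    """
    granted = set().union(*(ROLE_SCOPES.get(r, set()) for r in roles))
    return {
        "granted": sorted(s for s in api_scopes if s in granted),
        "missing": sorted(s for s in api_scopes if s not in granted),
    }


print(inspect_user_access(["viewer"], ["read:orgs", "write:orgs"]))
# {'granted': ['read:orgs'], 'missing': ['write:orgs']}
```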

Claude Code skill: The IDE intelligence layer

Claude Code Skills are not part of MCP.

They are Claude’s built-in abilities inside environments like:

- Cursor

- Claude Desktop

- VS Code / JetBrains editors

Examples of Claude Code skills:

- reading entire repositories

- applying multi-file edits

- generating new modules

- debugging logs

- refactoring code

- running tasks across files

What Claude Code cannot do: Claude Code skills cannot manage organization permissions, access tenant data, execute secure business workflows, call your internal infrastructure directly, or modify protected production resources. These limitations are exactly why MCP exists.

Visualizing the relationship

Put simply:

- Claude Code Skill = code reasoning

- MCP Tools = actions

- MCP Server = capabilities + security + business context

They complement, not replace, each other.

Why MCP servers are becoming the entry point for enterprise AI

MCP servers address one of the fundamental problems in AI development: a model has no understanding of your internal systems unless you expose those systems in a structured and secure way. Without that layer, the model is limited to guessing, pattern-matching, or generating instructions it can’t actually execute.

An MCP server provides that missing bridge. It gives you a controlled place to expose internal functionality, on your terms, while keeping security boundaries intact. With this setup, the model gains access to real capabilities without bypassing your infrastructure or permission model. In practice, an MCP server lets you:

- surface backend functions safely

- apply authentication and authorization to every action

- keep a single, consistent interface for multiple AI agents

- support multi-step workflows without embedding logic into prompts

- allow the model to act with real power, but always within defined limits

This is also why IAM platforms like Logto align so naturally with MCP.

Concepts such as permissions, tokens, organizations, scopes, and M2M flows translate directly into the security guarantees an MCP server is designed to enforce, turning identity into the backbone of safe AI execution.
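A sketch of what "authorization on every action" can look like: the server keeps one table mapping each tool to the scope it requires and checks the caller's token scopes before dispatching, so no individual tool re-implements the check, and unknown tools are denied by default. The scope names, the `TokenClaims` shape, and the tools are hypothetical. In a real deployment the claims would come from validating an access token issued by your identity provider (for example, an M2M token), a step omitted here.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class TokenClaims:
    """Hypothetical, already-validated token claims for the caller."""
    subject: str
    scopes: frozenset[str]


class Forbidden(Exception):
    pass


# The one place that says which scope each tool requires.
REQUIRED_SCOPE = {
    "list_organizations": "read:organizations",
    "create_organization": "write:organizations",
}

TOOLS: dict[str, Callable[..., Any]] = {
    "list_organizations": lambda: ["acme"],
    "create_organization": lambda name: {"name": name},
}


def call_tool(claims: TokenClaims, name: str, arguments: dict) -> Any:
    """Authorize, then dispatch. The tools themselves contain no permission logic."""
    required = REQUIRED_SCOPE.get(name)
    if required is None or name not in TOOLS:
        raise Forbidden(f"unknown or unregistered tool: {name}")  # deny by default
    if required not in claims.scopes:
        raise Forbidden(f"{claims.subject} lacks scope {required} for {name}")
    return TOOLS[name](**arguments)


m2m = TokenClaims(subject="m2m-reporting-app", scopes=frozenset({"read:organizations"}))
print(call_tool(m2m, "list_organizations", {}))  # ['acme']
try:
    call_tool(m2m, "create_organization", {"name": "New Org"})
except Forbidden as err:
    print(err)  # m2m-reporting-app lacks scope write:organizations for create_organization
```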

When should you use MCP server vs tools vs Claude Code skill?

| Scenario | Best Choice |
|---|---|
| Multi-file code editing | Claude Code Skill |
| Execute a simple action | MCP Tools |
| Expose secure enterprise workflows | MCP Server |
| Integrate internal backend capabilities | MCP Server |
| Build an AI-powered product feature | MCP Server + Tools |
| Let developers interact with infrastructure via IDE | Claude Code Skill + MCP Server |
| Perform identity / access control operations | MCP Server |

The future: The three layers working together

The strongest AI systems will combine all three:

- Claude Code for code reasoning and refactoring

- MCP Tools for atomic operations

- MCP Server for secure backend functionality

Together they allow:

- IDE intelligence

- Executable tools

- Enterprise-level capabilities

This stack turns AI from “a helpful chatbot” into:

- an IAM operator

- a DevOps assistant

- a backend automation engine

- a workflow executor

And for platforms like Logto, it becomes the natural distribution layer for identity and authorization.

Final summary

MCP Server

A backend service exposing secure system capabilities to AI.

MCP Tools

Atomic actions provided by the server. Think of them as callable functions.

Claude Code Skill

IDE intelligence that understands code and performs sophisticated editing—but cannot access secure systems by itself.

Together: AI understands your code (Claude Code), executes precise actions (Tools), and performs secure workflows using your internal systems (MCP Server).

This is the foundation of the next generation of AI-driven development.