Postmortem: Bad Gateway

Incident report for the Logto service outage on 2024-01-11 due to domain renewal failure.

Summary

On 2024-01-11, Logto services experienced an outage, with many requests returning 502 Bad Gateway errors.

- Time started: Around 2024-01-11 15:28 UTC

- Time resolved: Around 2024-01-12 00:49 UTC

- Duration: Around 9 hours

- Affected services: Logto auth service, Logto Cloud service

- Impact level: Critical

- Root cause: The logto.app domain expired, and the renewal failed to complete.

Timeline

- 2024-01-11 15:28 UTC User reports 502 Bad Gateway error when accessing Logto auth service.

- 2024-01-11 15:42 UTC More users report the same issue.

- 2024-01-11 15:50 UTC Our team members start investigating the issue and phone other team members. Because it was late at night for some of them, ordinary phone calls were not enough to wake them up.

- 2024-01-11 23:54 UTC We find out that the Cloud service is sending requests to the auth service, but the requests fail with an ERR_TLS_CERT_ALTNAME_INVALID error.

- 2024-01-12 00:36 UTC We purged the DNS cache to see if it would help. It didn't.

- 2024-01-12 00:38 UTC We re-issued the TLS certificates to see if that would help. It didn't.

- 2024-01-12 00:45 UTC We noticed that the logto.app domain may have expired. We checked the domain registrar and found out it didn't renew successfully and the domain was expired.

- 2024-01-12 00:49 UTC The domain renewal completed. Services are getting back to normal gradually.

Incident analysis

What happened?

Our domains are typically renewed automatically through our domain registrar. However, in this instance, the renewal process failed due to a potential misconfiguration. Consequently, the logto.app domain expired, and the DNS records were updated to point to the registrar's parking page.

During the outage, the auth service itself remained operational, but most requests could not reach it. The exception was the Logto admin tenant, which is bound to the auth.logto.io domain and was unaffected by the expiration.

In addition to the auth service, we also have a Cloud service that orchestrates the Logto tenants and serves the Logto Cloud Console (a frontend app).

When a user operates the Cloud Console, the app doesn't directly call the auth service; instead, it calls the Cloud service for all management operations.

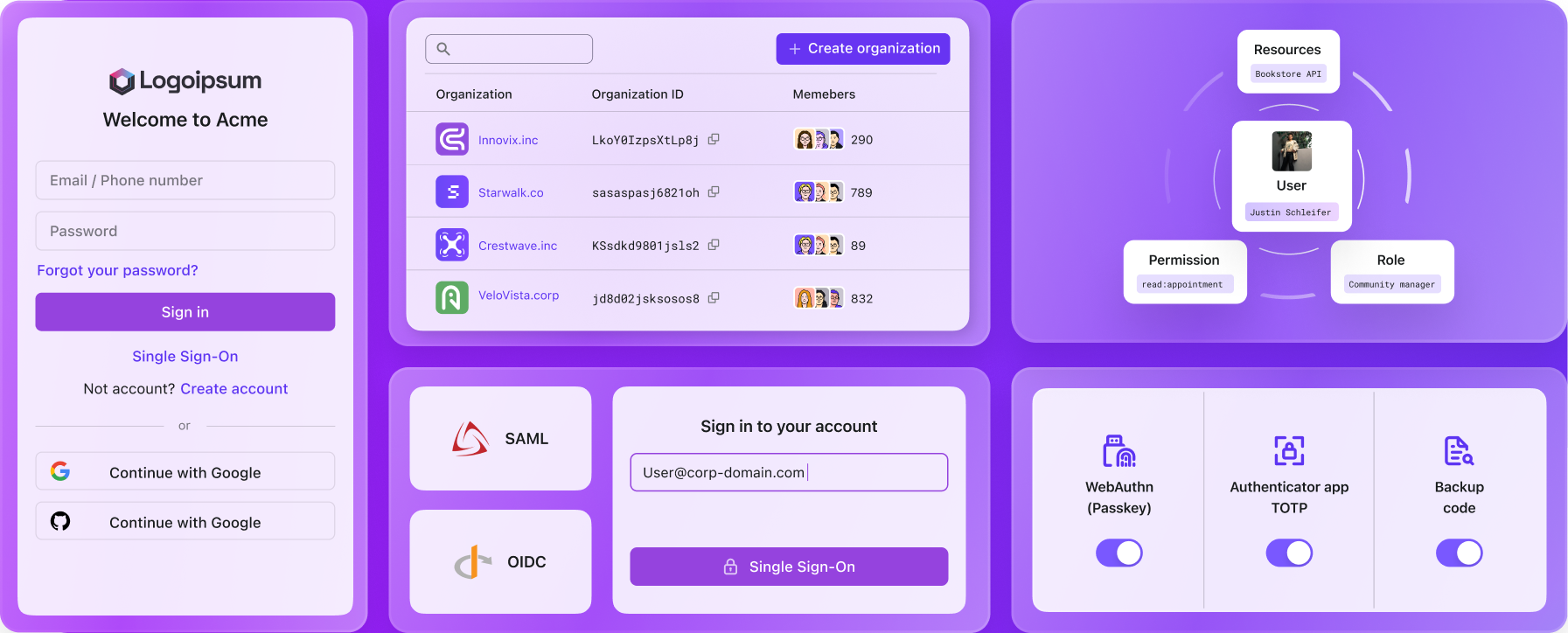

To align with the Logto Management API, we designed a "Management API proxy" endpoint to delegate requests to the auth service. The entire flow looks like this:

Since the *.logto.app domain has a certificate mismatch issue, the Cloud service (Node.js) rejects the request and throws an error.
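
Node.js raises ERR_TLS_CERT_ALTNAME_INVALID when the hostname being requested is not covered by any name on the certificate the server presents. The sketch below is a simplified illustration of that check, not Node.js's actual implementation. The tenant hostname and the `parking.example` certificate name are hypothetical, standing in for whatever certificate the registrar's parking page served.

```typescript
// Simplified hostname check: a wildcard covers exactly one leftmost label.
function matchesSan(hostname: string, san: string): boolean {
  const host = hostname.toLowerCase();
  const pattern = san.toLowerCase();

  if (pattern.slice(0, 2) !== '*.') {
    return host === pattern;
  }

  // For "*.logto.app" the suffix is ".logto.app".
  const suffix = pattern.slice(1);
  if (host.slice(-suffix.length) !== suffix) {
    return false;
  }

  // The part replaced by "*" must be a single, non-empty label.
  const label = host.slice(0, host.length - suffix.length);
  return label.length > 0 && label.indexOf('.') === -1;
}

// True if at least one subject alternative name covers the hostname.
function isHostnameCovered(hostname: string, sans: string[]): boolean {
  return sans.some((san) => matchesSan(hostname, san));
}

// Hypothetical tenant hostname against the expected wildcard certificate.
console.log(isHostnameCovered('tenant.logto.app', ['*.logto.app']));

// Same hostname against a certificate issued for an unrelated (hypothetical) name.
console.log(isHostnameCovered('tenant.logto.app', ['parking.example']));
```

The first call succeeds and the second fails, which is the situation a TLS client is in when a domain suddenly resolves to a server holding a certificate for some other name.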

Normally, request errors are caught to prevent the entire service from crashing. However, since the error was propagated from the proxy module, the existing error handling logic was unable to catch it, leading to a service crash.
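
To illustrate how an error can slip past a request handler's error handling, here is a minimal sketch. It assumes the proxy reports failures later through an emitter-style channel rather than by throwing inside the handler. `FakeProxy`, `unsafeHandler`, and `safeHandler` are hypothetical names, not the actual Logto code. As with Node.js's EventEmitter, an error reported with no listener attached is thrown.

```typescript
type ErrorListener = (error: Error) => void;

// Hypothetical stand-in for an emitter-style proxy module.
class FakeProxy {
  private listeners: ErrorListener[] = [];

  onError(listener: ErrorListener): void {
    this.listeners.push(listener);
  }

  // Starts forwarding; the outcome is reported later through fail().
  forward(): void {}

  // Called by the network layer after the request handler has already returned.
  fail(error: Error): void {
    if (this.listeners.length === 0) {
      throw error; // nobody is listening, so the error escapes
    }
    for (const listener of this.listeners) {
      listener(error);
    }
  }
}

// Unsafe: try/catch only covers errors thrown while forward() is running.
function unsafeHandler(proxy: FakeProxy): void {
  try {
    proxy.forward();
  } catch {
    // Never reached for errors reported later via fail().
  }
}

// Safer: a listener turns late proxy errors into a 502 response.
function safeHandler(proxy: FakeProxy, respond: (status: number) => void): void {
  proxy.onError(() => respond(502));
  proxy.forward();
}

const tlsError = new Error('ERR_TLS_CERT_ALTNAME_INVALID');

const unsafeProxy = new FakeProxy();
unsafeHandler(unsafeProxy);
let crashed = false;
try {
  unsafeProxy.fail(tlsError); // in a real server nothing would catch this
} catch {
  crashed = true;
}

const statuses: number[] = [];
const safeProxy = new FakeProxy();
safeHandler(safeProxy, (status) => statuses.push(status));
safeProxy.fail(tlsError);

console.log({ crashed, statuses });
```

In the unsafe variant the failure surfaces outside the handler, which in a real Node.js process would be an uncaught exception. In the safe variant the same failure becomes a single failed response.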

Although every Logto service has at least three replicas, all replicas crashed easily because the error occurred in almost every request from the Cloud Console. The auto-recovery mechanism takes time to kick in, so the service was unavailable for a while.

This is why users saw 502 Bad Gateway errors: all replicas had crashed. Once the Cloud service came back up, new and retried Cloud Console requests arrived, and the crash loop continued.

When the Cloud service is down, it also impacts certain auth service endpoints, mostly /api/.well-known/sign-in-exp. This endpoint fetches the sign-in experience configuration, which includes connector information that must be fetched from the Cloud service.

Resolution

- Manually renew the domain.

Lessons learned

- Always set up monitoring for domain expiration or purchase for a longer duration.

- Be aware that timezone differences can cause a delay in incident response.

- Ensure monitoring covers all domains.

- Exercise caution when interacting with throwable modules, ensuring errors can be caught and handled properly.

Corrective and preventative measures

- ✅ Add monthly monitoring for domain expiration, regardless of whether auto-renewal is enabled.

- ✅ Add monitoring for logto.app.

- ✅ Update the Cloud service error handling logic to catch and handle proxy errors properly.

- ✅ Implement stronger alerts that can wake up the team during incidents until we have an SRE team covering all time zones.
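
The domain expiration monitoring listed above could be sketched roughly as follows. This is only an illustration: the 30-day and 7-day thresholds, the function names, and the dates in the usage lines are assumptions. Fetching the real expiry date (for example from the registrar) is left out.

```typescript
type ExpiryStatus = 'ok' | 'warning' | 'critical' | 'expired';

const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Whole days left until the domain expires; negative once it has expired.
function daysUntilExpiry(expiresAt: Date, now: Date): number {
  return Math.floor((expiresAt.getTime() - now.getTime()) / MS_PER_DAY);
}

// Classify the domain regardless of whether auto-renewal is enabled,
// since auto-renewal itself can fail.
function classifyExpiry(
  expiresAt: Date,
  now: Date,
  warnDays = 30, // assumed threshold
  criticalDays = 7 // assumed threshold
): ExpiryStatus {
  const days = daysUntilExpiry(expiresAt, now);
  if (days < 0) {
    return 'expired';
  }
  if (days <= criticalDays) {
    return 'critical';
  }
  if (days <= warnDays) {
    return 'warning';
  }
  return 'ok';
}

// Hypothetical dates, for illustration only.
const checkTime = new Date('2024-01-01T00:00:00Z');
console.log(classifyExpiry(new Date('2024-01-11T00:00:00Z'), checkTime));
console.log(classifyExpiry(new Date('2024-01-05T00:00:00Z'), checkTime));
```

A scheduled job could run a check like this for every domain in use and page the team on `critical` or `expired`.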