Understanding AI agent skills: Why authentication security matters

Skills turn AI into active operators, but managing secure, scoped credentials across many tools makes authentication one of the hardest challenges.

The problem: AI that can only talk

Traditional large language models (LLMs) like ChatGPT or Claude are incredibly powerful at understanding and generating text. But on their own, they can't:

- Access real-time data from the web

- Send emails or notifications

- Save information to databases

- Generate images or audio

- Interact with external APIs

AI agent skills solve this limitation by giving AI agents the tools they need to take action in the real world.

What are AI agent skills?

Imagine having a personal assistant that can manage your emails, update spreadsheets, send messages across different platforms, and coordinate multiple tools, all without constant supervision.

That’s what AI agents powered by skills make possible.

Skills are pre-built integrations that teach an AI agent how to interact with specific services.

In simple terms, a skill is a structured description that tells the agent how to use an API and what actions it can perform.
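
To make "structured description" concrete, here is a minimal sketch of what such a description could look like in Python. The `Skill` and `Action` classes, their field names, and the `api.example.com` URL are all assumptions made for illustration; real skill formats differ between platforms.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Action:
    """One operation the agent may invoke through the skill (hypothetical shape)."""
    name: str
    method: str        # HTTP verb, e.g. "POST"
    path: str          # path relative to the skill's base URL
    description: str   # natural-language hint the LLM reads when choosing an action


@dataclass(frozen=True)
class Skill:
    """Hypothetical structured description of a single integration."""
    name: str
    base_url: str
    secret_env_var: str   # name of the environment variable holding the credential
    actions: tuple[Action, ...] = field(default_factory=tuple)

    def describe(self) -> str:
        """Render the text an agent could be shown to learn what the skill does."""
        lines = [f"Skill: {self.name}"]
        for a in self.actions:
            lines.append(f"- {a.name}: {a.method} {self.base_url}{a.path} ({a.description})")
        return "\n".join(lines)


# Placeholder URL and action: not the real API of any service.
slack = Skill(
    name="slack",
    base_url="https://api.example.com/slack",
    secret_env_var="SLACK_TOKEN",
    actions=(Action("post_message", "POST", "/messages", "Send a message to a channel"),),
)
print(slack.describe())
```

Note that the description carries only the name of the secret, never its value, so it can be stored and shared safely.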

You can think of skills like apps on your phone: each one unlocks a specific capability and extends what the agent can do. Common categories include:

- Communication: Slack, Discord, email platforms

- Development: GitHub, GitLab, CI/CD tools

- Data: Google Sheets, databases, analytics

- Creative: Image generation, video editing

- Productivity: Project management, documentation

Instead of spending weeks writing custom code for each integration, you enable a skill and provide the necessary credentials. The AI agent can then use that service right away, with error handling and best practices built in.

Think of an AI agent as a highly intelligent employee. The LLM (Claude, GPT, etc.) is the employee's brain—capable of reasoning, planning, and making decisions. Skills are the tools and abilities that let this employee actually get work done.

| Component | Analogy | Function |
|---|---|---|
| LLM | Employee's brain | Reasoning, planning, decision-making |
| Skills | Tools & abilities | Execute actions, call APIs, process data |
| Prompt | Task assignment | Define what needs to be done |

Without skills: An AI that can only discuss tasks

With skills: An AI that can discuss, plan, and execute tasks

AI agent skills vs. Function calling vs. MCP

Understanding the ecosystem of AI tool integration:

| Concept | Description | Scope |
|---|---|---|
| Function Calling | LLM capability to emit structured calls to predefined functions, which the host application executes | Single API interaction |
| MCP (Model Context Protocol) | Open protocol, introduced by Anthropic, that standardizes how applications connect models to tools and data | Interoperability standard |
| AI Agent Skills | Pre-packaged, production-ready capability modules | Complete integration solution |

AI Agent Skills = Function Calling + Configuration + Authentication + Best Practices

Skills abstract away the complexity of:

- API authentication and token management

- Error handling and retries

- Rate limiting and quotas

- Response parsing and validation
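
As one illustration of what a skill might hide from the agent, here is a minimal sketch of retrying a flaky call with exponential backoff. `TransientError` and `call_with_retries` are hypothetical names, and the attempt count and delays are assumed defaults rather than values taken from any particular skill framework.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical error type for failures worth retrying (timeouts, rate limits)."""


def call_with_retries(
    action: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `action`, retrying transient failures with exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return action()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error to the caller
            # Delay doubles each time: base_delay, 2x, 4x, ...
            sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")
```

Passing `sleep` as a parameter keeps the function testable without real waiting; production code would usually add jitter and honor any retry hints the service returns.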

Benefits of using AI agent skills

Plug-and-Play Integration

No need to write integration code from scratch. Reference a skill, provide credentials, and start using it immediately.

Secure Secret Management

API keys and tokens are supplied through secret references such as ${{ secrets.API_KEY }} and resolved into environment variables at runtime, so the values never appear in code.
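
On the consuming side, keeping secrets out of code usually means reading them from the environment at runtime. The sketch below shows one way to do that in Python; `load_secret`, `mask`, and the `DEMO_API_KEY` variable name are illustrative assumptions, not part of any specific skill platform.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not present in the environment."""


def load_secret(name: str) -> str:
    """Return the secret stored in environment variable `name`, failing loudly if absent."""
    value = os.environ.get(name, "").strip()
    if not value:
        # Report only the variable name; never put a secret value in an error message.
        raise MissingSecretError(f"environment variable {name!r} is not set")
    return value


def mask(secret: str, visible: int = 4) -> str:
    """Return a log-safe form that shows only the last few characters."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]
```

A caller would write `api_key = load_secret("DEMO_API_KEY")` once at startup and log `mask(api_key)` if it needs to confirm which key is in use.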

Composability

Combine multiple skills to create sophisticated workflows. A news digest agent might use:

- hackernews → fetch stories

- elevenlabs → generate audio

- notion → store content

- zeptomail → send notifications
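
The news digest flow above can be sketched as a chain of steps that pass a shared state along. Every function here is a stub standing in for a real skill call; the function names, return shapes, and the `run_workflow` helper are assumptions for illustration only.

```python
from typing import Callable

Step = Callable[[dict], dict]


# Stubs: each one stands in for a call to the named skill and returns fake data.
def fetch_stories(state: dict) -> dict:        # hackernews
    return {**state, "stories": ["Story A", "Story B"]}


def generate_audio(state: dict) -> dict:       # elevenlabs
    return {**state, "audio": f"audio for {len(state['stories'])} stories"}


def store_content(state: dict) -> dict:        # notion
    return {**state, "page_id": "page-1"}


def send_notification(state: dict) -> dict:    # zeptomail
    return {**state, "notified": True}


def run_workflow(steps: list[Step]) -> dict:
    """Pass a shared state dict through each skill in order."""
    state: dict = {}
    for step in steps:
        state = step(state)
    return state


digest = run_workflow([fetch_stories, generate_audio, store_content, send_notification])
print(digest)
```

Because each step only reads and extends the state, skills can be reordered or swapped without touching the others. Each real skill would also carry its own credential, which is where the authentication questions below come from.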

Version Control

Lock skills to specific versions for stability, or always use the latest for new features.

Community-Driven

Open-source skill repositories allow anyone to contribute new integrations and improvements.

The authentication challenge

Here’s the critical question: how does an AI agent prove it has permission to access external services?

The answer is authentication credentials: digital keys that grant access to your most valuable systems and data.

These credentials can take many forms: API keys, user credentials, OAuth tokens, and other delegated access mechanisms. Each represents a different trust model and security boundary.

The challenge is that modern AI agents don’t just call one API. They orchestrate dozens of services, tools, and integrations across environments. As the number of connected systems grows, so does the complexity of managing authentication safely.

What was once a simple secret now becomes a distributed security problem: how credentials are issued, scoped, rotated, stored, and revoked across automated workflows.

This is where most agent architectures begin to break down: not because the model lacks intelligence, but because identity and access control are hard to get right.

Types of credentials: understand what you are actually protecting

API keys: Static shared secrets

Definition:

API keys are static bearer tokens used to authenticate requests. Possession of the key alone is sufficient to gain access.

Technical characteristics:

- Long-lived or non-expiring by default

- Typically scoped at the account or project level

- No intrinsic identity binding or session context

- Cannot distinguish between human, service, or automation usage

Security properties:

- No built-in rotation or expiration enforcement

- No native support for fine-grained permission isolation

- Any leak results in full compromise until manual rotation

Threat model:

High blast radius. API keys are often leaked via logs, client-side code, or CI/CD misconfiguration.

Common usage:

Simple service integrations, internal tooling, legacy APIs, early-stage developer platforms.
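
Since logs are one of the leak paths named in the threat model, a common mitigation is to scrub credential-like values before log records are written. The following is a minimal sketch using Python's standard `logging.Filter`. The regular expression is an illustrative assumption that deliberately over-redacts; it is not a complete detector for any provider's key format.

```python
import logging
import re

# Illustrative pattern only: matches values after "api_key=", "token: ", "Bearer ", etc.
SECRET_PATTERN = re.compile(
    r"(?i)\b(api[_-]?key|token|secret|bearer)(\s*[=:]\s*|\s+)([A-Za-z0-9._\-]{8,})"
)


def redact(text: str) -> str:
    """Replace anything that looks like a credential value with a placeholder."""
    return SECRET_PATTERN.sub(lambda m: f"{m.group(1)}{m.group(2)}[REDACTED]", text)


class RedactingFilter(logging.Filter):
    """Logging filter that scrubs credential-like values before records are emitted."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = redact(record.getMessage())
        record.args = ()  # the message is already fully formatted
        return True
```

Attaching the filter with `handler.addFilter(RedactingFilter())` applies it to everything that handler emits. Redaction is a safety net, not a substitute for keeping keys out of log statements in the first place.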

OAuth tokens: Delegated and scoped authorization

Definition:

OAuth tokens are short-lived credentials issued by an authorization server, representing delegated access on behalf of a user or application.

Technical characteristics:

- Time-bound (minutes to days)

- Scope-based authorization model

- Backed by standardized OAuth 2.0 / OIDC flows

- Can be revoked independently of user credentials

Security properties:

- Reduced blast radius through scope limitation

- Supports token rotation and refresh mechanisms

- Designed for third-party and cross-service access

Threat model:

Moderate risk. Compromise impact is limited by scope and lifetime, but still sensitive in high-privilege environments.

Common usage:

SaaS integrations, enterprise SSO, user-facing APIs, third-party app access (GitHub, Google Workspace, Slack).
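
The two properties that limit an OAuth token's blast radius, lifetime and scope, can both be checked before an agent acts. This is a minimal sketch under stated assumptions: `AccessToken` is a hypothetical in-memory record, the scope names are invented, and the 30-second clock-skew margin is an arbitrary choice. It does not validate signatures or talk to an authorization server.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class AccessToken:
    """Minimal stand-in for an OAuth 2.0 access token and its metadata."""
    value: str
    scopes: frozenset[str]
    expires_at: datetime

    def is_expired(self, now: datetime, skew: timedelta = timedelta(seconds=30)) -> bool:
        # Treat tokens that are about to expire as expired so in-flight calls do not fail.
        return now + skew >= self.expires_at

    def allows(self, required_scope: str) -> bool:
        return required_scope in self.scopes


def authorize(token: AccessToken, required_scope: str, now: datetime) -> None:
    """Raise PermissionError unless the token is still live and carries the scope."""
    if token.is_expired(now):
        raise PermissionError("access token expired; refresh before retrying")
    if not token.allows(required_scope):
        raise PermissionError(f"token lacks scope {required_scope!r}")


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
token = AccessToken("opaque-value", frozenset({"messages:write"}), now + timedelta(minutes=10))
authorize(token, "messages:write", now)  # passes silently
```

Calling `authorize(token, "messages:delete", now)` would raise `PermissionError`, which is the behavior you want when an agent tries to step outside what the user delegated.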

Personal Access Tokens (PATs): User-scoped programmatic credentials

Definition:

Personal Access Tokens are long-lived tokens issued to a specific user identity, intended for automation and non-interactive workflows.

Technical characteristics:

- Bound to a user account, not an application

- Often manually created and manually revoked

- Typically support granular permission scopes

- Frequently used in CLI tools and CI/CD pipelines

Security properties:

- More controllable than API keys (scopes, per-user revocation), but typically longer-lived and broader than OAuth access tokens

- Risk increases when used in headless or shared environments

- Often lack automatic rotation or expiration unless explicitly configured

Threat model:

Medium to high risk. A leaked PAT effectively impersonates a real user within its granted scope.

Common usage:

GitHub/GitLab automation, CI pipelines, developer tooling, infrastructure scripts.

The four pillars of secure authentication

Least privilege: give minimum access

Credentials should follow the principle of least privilege and only grant the minimum permissions required to perform a task.

For example, a social media posting bot should not have full administrative access that allows it to delete content, view analytics, or manage billing. Instead, it should be issued a narrowly scoped credential that only permits content publishing, with clear limits such as a daily quota and an expiration window. When credentials are constrained this way, even if they are leaked, the potential damage is strictly limited.
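
The posting-bot example can be sketched as a credential that carries its own limits. `ScopedCredential`, the action names, and the quota value are hypothetical, and the daily counter reset is left out to keep the sketch short. In practice these limits are enforced by the service that issues the credential, not by the agent itself.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class ScopedCredential:
    """Hypothetical credential limited by action, daily quota, and expiry."""
    allowed_actions: frozenset[str]
    daily_quota: int
    expires_at: datetime
    used_today: int = 0   # resetting this each day is omitted from the sketch

    def check(self, action: str, now: datetime) -> None:
        """Raise PermissionError if the action falls outside the credential's limits."""
        if now >= self.expires_at:
            raise PermissionError("credential expired")
        if action not in self.allowed_actions:
            raise PermissionError(f"action {action!r} not permitted")
        if self.used_today >= self.daily_quota:
            raise PermissionError("daily quota exhausted")
        self.used_today += 1


start = datetime(2025, 1, 1, tzinfo=timezone.utc)
bot = ScopedCredential(
    allowed_actions=frozenset({"publish_post"}),
    daily_quota=2,
    expires_at=start + timedelta(days=7),
)
bot.check("publish_post", start)  # allowed: in scope, within quota, not expired
```

With this shape, a leaked credential can publish at most two posts a day for a week, and cannot delete content or touch billing at all.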

Secure storage: Never hardcode

| What NOT to do | What TO do |
|---|---|
| Hardcode credentials in source code | Use environment variables |
| Commit them to Git repositories | Implement secret management systems (HashiCorp Vault, AWS Secrets Manager) |
| Share via email or Slack | Encrypt credentials at rest |
| Store in plaintext files | Use temporary credentials when possible |

Regular rotation: Change the locks

Regularly replace credentials even if you don't think they're compromised.

Recommended frequency:

- API Keys (critical): Every 30-90 days

- OAuth Tokens: Automatic via refresh tokens

- After security incident: Immediately

Why it matters: Rotation limits the window of opportunity for stolen credentials and forces a review of which credentials are still needed.
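
The schedule above can be turned into a small check that a scheduled job runs against a credential inventory. This is a sketch under assumptions: `rotation_due` and the `MAX_AGE` table are hypothetical, and 90 days is simply the upper end of the range recommended above.

```python
from datetime import date, timedelta

# Maximum age per credential type; only API keys have a fixed schedule in this sketch.
MAX_AGE = {"api_key": timedelta(days=90)}


def rotation_due(kind: str, issued_on: date, today: date, compromised: bool = False) -> bool:
    """Return True when a credential should be rotated now."""
    if compromised:
        return True  # after a security incident, rotate immediately
    max_age = MAX_AGE.get(kind)
    if max_age is None:
        return False  # e.g. OAuth tokens, which are renewed through refresh tokens
    return today - issued_on >= max_age
```

For example, `rotation_due("api_key", date(2025, 1, 1), date(2025, 4, 1))` returns `True`, because exactly 90 days have passed.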

Continuous monitoring: Stay alert

When monitoring credential usage, it’s important to watch for abnormal patterns that signal potential abuse. Warning signs include sudden spikes in failed authentication attempts, unusual access locations, unexpected surges in API usage, or attempts to escalate permissions. For example, normal behavior might look like 1,000 API calls per day from a known office IP during business hours, while suspicious activity could involve tens of thousands of requests within a few hours from an unfamiliar country in the middle of the night.
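
The warning signs above translate naturally into rule-based checks against a baseline. The sketch below is illustrative only: the class names, the thresholds (`spike_factor`, `max_failed_auth`), and the `"US"` placeholder country are assumptions, and a production system would derive baselines from observed traffic instead of hard-coding them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Baseline:
    """Expected behavior for one credential; defaults mirror the example above."""
    daily_requests: int = 1_000
    known_countries: frozenset[str] = frozenset({"US"})  # placeholder location
    business_start_hour: int = 8
    business_end_hour: int = 18
    spike_factor: int = 10       # assumed multiple of the baseline that counts as a surge
    max_failed_auth: int = 20    # assumed tolerance for failed authentication attempts


@dataclass(frozen=True)
class UsageWindow:
    """Observed activity for the same credential over a recent window."""
    requests: int
    failed_auth: int
    country: str
    local_hour: int              # 0-23
    escalation_attempts: int = 0


def warning_signs(seen: UsageWindow, base: Baseline) -> list[str]:
    """Return the names of every rule the observed window violates."""
    signs = []
    if seen.failed_auth > base.max_failed_auth:
        signs.append("failed-auth spike")
    if seen.country not in base.known_countries:
        signs.append("unusual location")
    if seen.requests > base.daily_requests * base.spike_factor:
        signs.append("usage surge")
    if seen.escalation_attempts > 0:
        signs.append("permission escalation attempt")
    if not base.business_start_hour <= seen.local_hour < base.business_end_hour:
        signs.append("off-hours activity")
    return signs
```

A window such as `UsageWindow(requests=40_000, failed_auth=0, country="ZZ", local_hour=3)` trips three rules at once, which is a much stronger signal than any single rule firing on its own.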

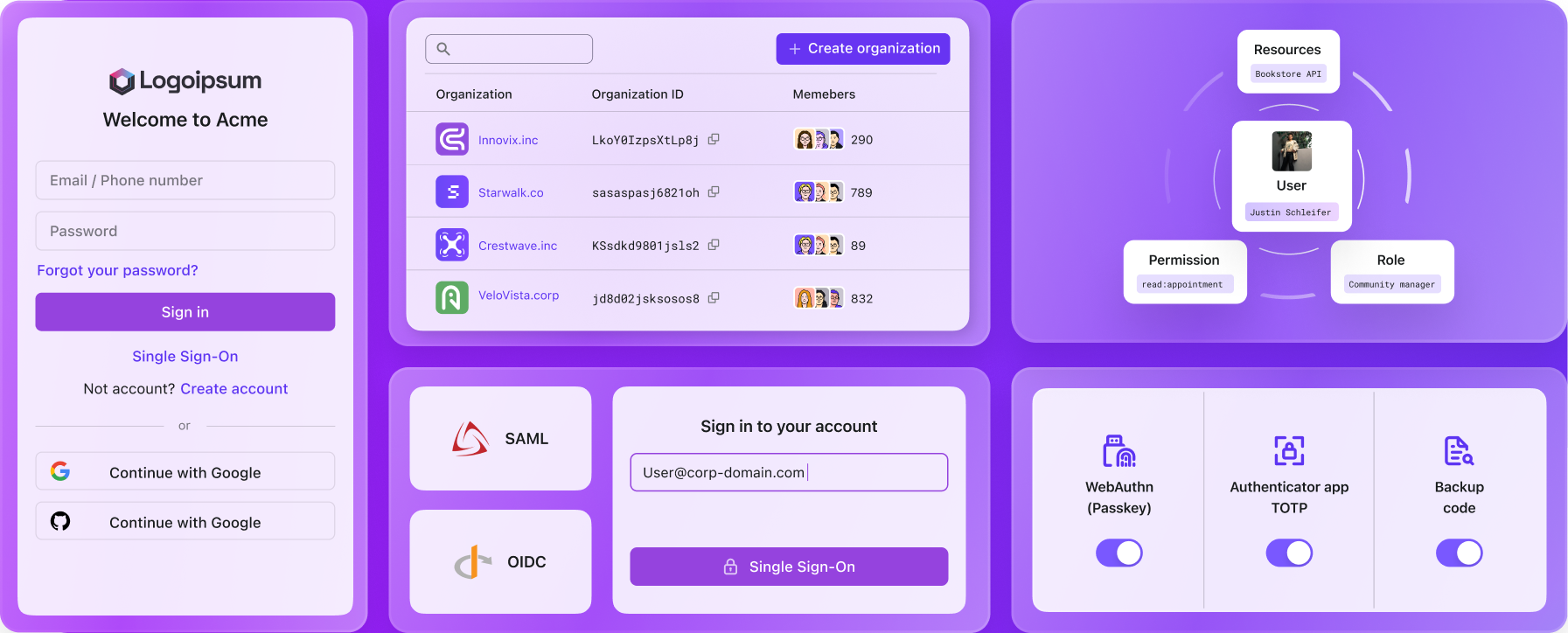

Leading authentication solutions

In the era of AI-driven systems, having tokens and API keys scattered across codebases, scripts, and environments is no longer acceptable. Secrets sprawl is not just a hygiene issue; it is a security risk.

Modern authentication platforms address this by providing secure credential storage and secret management capabilities. These built-in vaults allow sensitive tokens to be stored, encrypted, rotated, and accessed securely at runtime, rather than hard-coded or manually distributed.

Providers such as Auth0, Logto, and WorkOS offer native support for securely storing and managing credentials, making it easier to control access, reduce leakage risk, and enforce proper lifecycle management across services and agents.