AI agent auth: use cases and identity needs


2025 is shaping up to be the year of AI. With the rapid growth of LLMs and agent experiences, we’re often asked: How do we embrace this new era? And what are the new use cases for AI agent authentication and authorization? In this article, we will explore the typical agent experience and point out the security and auth scenarios along the way.

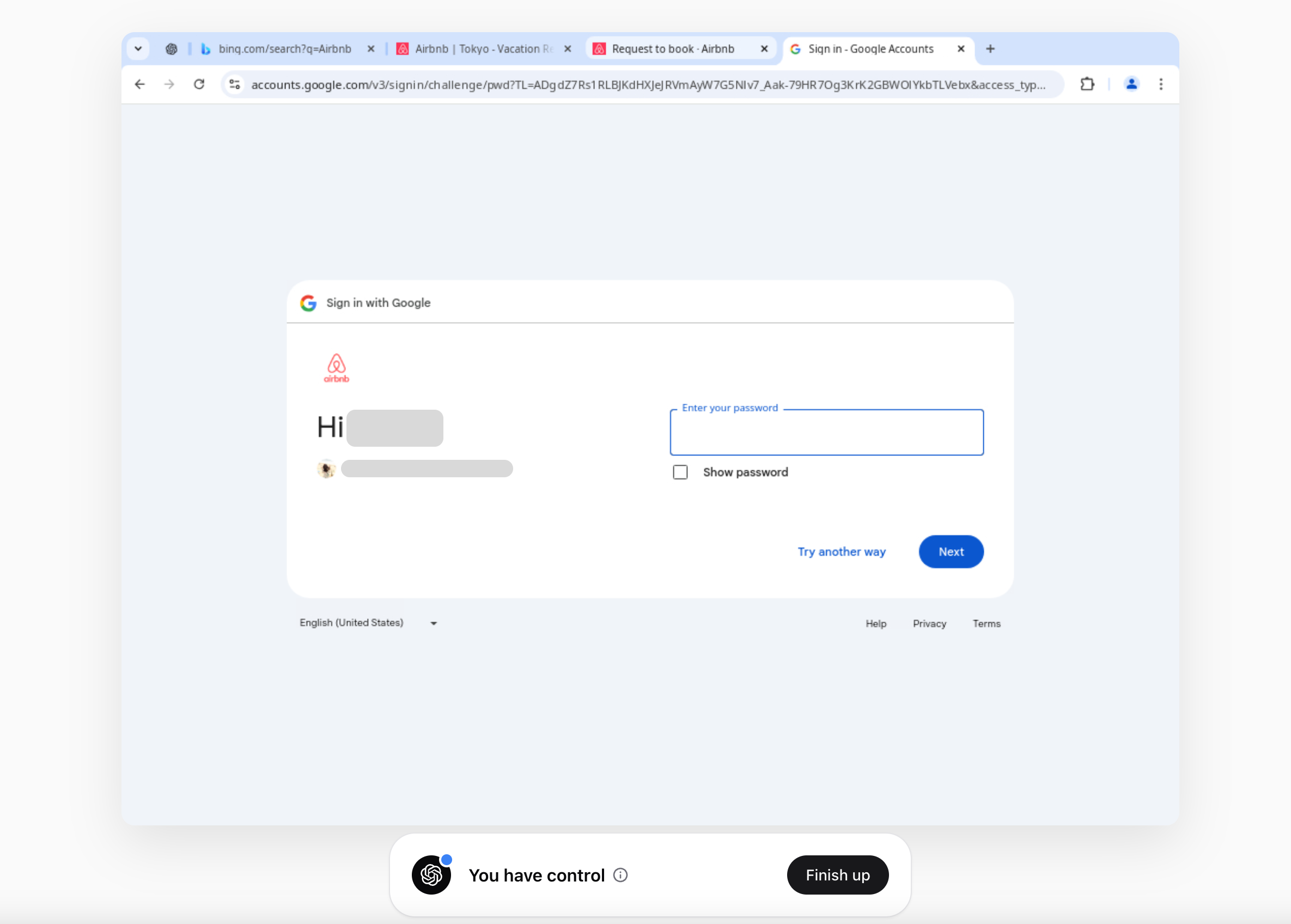

ChatGPT Operator agent auth experience

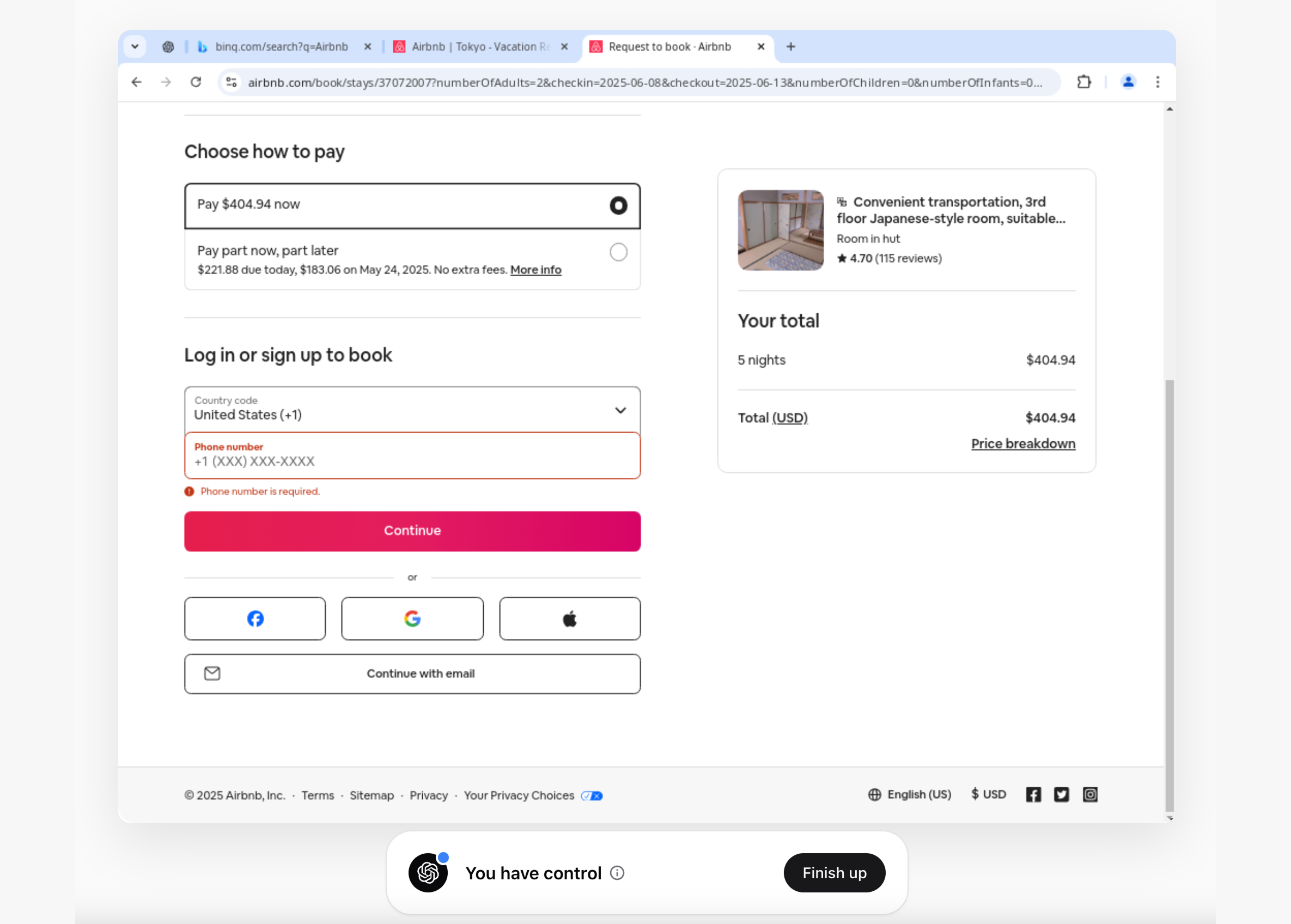

I recently purchased ChatGPT Operator and explored a few common workflows. One example was booking a stay in Tokyo, Japan. Operator made it incredibly easy to find a suitable room based on my prompt.

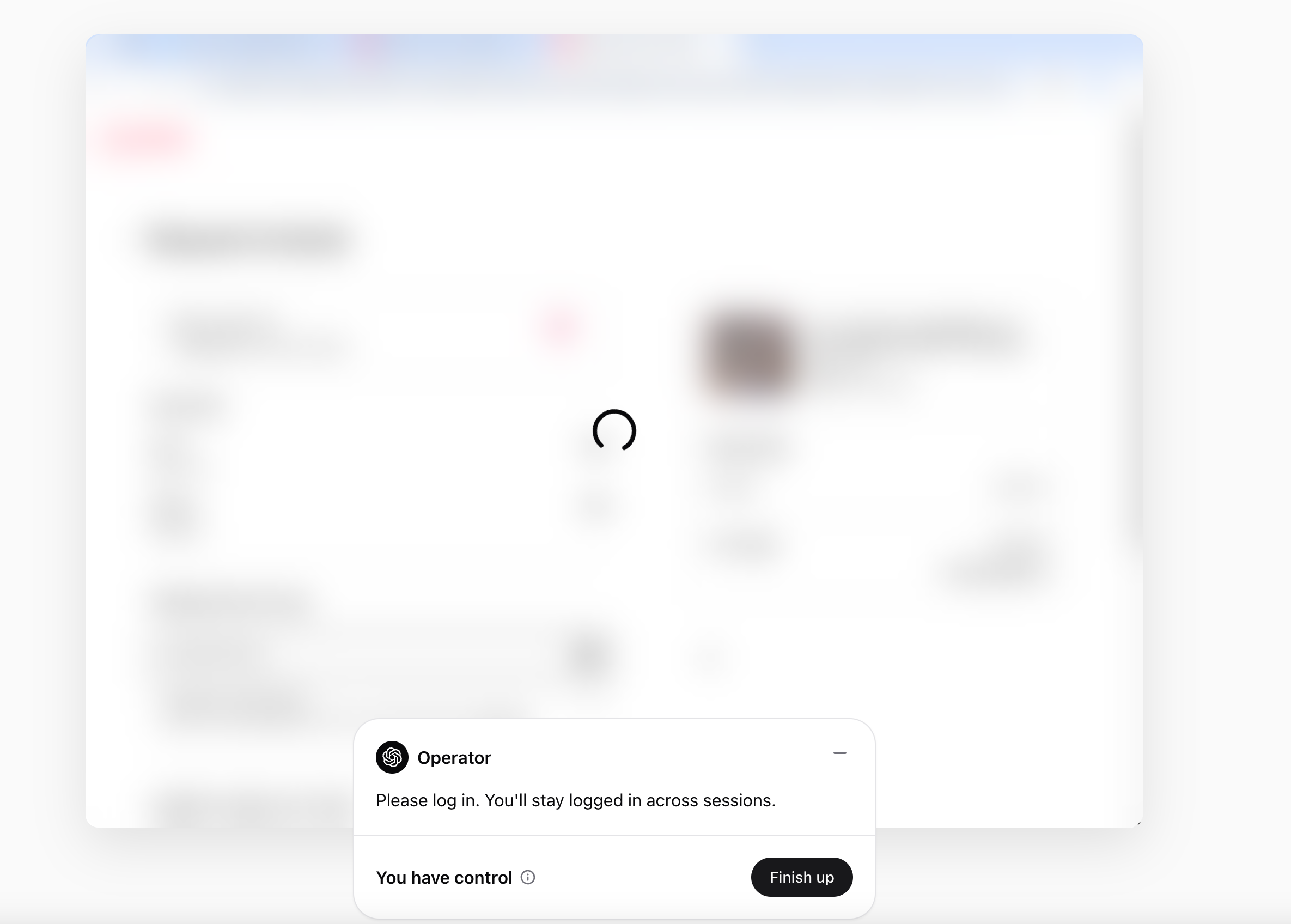

During checkout, it asked me to sign in and handed control back to me.

This experience made me uneasy. Even though I stayed in control and the agent couldn't log in for me, I still had to enter my email and password in the Operator's browser. This means that if you log into your email (or any other service) through the Operator, your session cookies, which effectively act as credentials, are stored in that browser.

OpenAI states that Operator never stores user credentials and that it follows compliance standards such as SOC 2. However, when third-party agents interact with external services on your behalf, the security risks increase significantly.

In general, directly giving an agent your account access and credentials is a bad idea.

There’s still a lot of room for improvement. In the next section, I’ll dive into different authentication and credential management approaches, weighing their pros and cons.

Others have raised the same concern in discussions on X.

How are credentials handled, and what are the security risks?

Directly give the AI agent your credentials

In this approach, the AI enters plaintext credentials (such as an email and password) on your behalf. For example, an AI agent might request your login details and input them for you.

However, this method carries security risks, as it can expose sensitive information. If this approach is unavoidable, it's safer to integrate a password manager or secret management system. Additionally, limiting how long credentials are stored helps minimize the risk of leaks.
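To make "limiting how long credentials are stored" concrete, here is a minimal Python sketch of an in-memory holder that forgets a credential once a time-to-live (TTL) has passed. The class name `ShortLivedSecret` and the injectable clock are illustrative assumptions, not any product's API; a real agent would fetch the secret from a password manager or secret store rather than keep it around.

```python
import time
from typing import Callable, Optional


class ShortLivedSecret:
    """Holds a credential in memory for a limited time, then forgets it.

    Hypothetical sketch: the TTL policy is the point, not secure storage.
    """

    def __init__(self, value: str, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._value: Optional[str] = value
        self._clock = clock
        self._expires_at = clock() + ttl_seconds

    def get(self) -> Optional[str]:
        # Hand out the secret only while it is within its lifetime.
        if self._value is not None and self._clock() >= self._expires_at:
            self._value = None  # drop our reference once the TTL has passed
        return self._value

    def clear(self) -> None:
        # Let the agent discard the credential as soon as the task is done.
        self._value = None
```

Note that in Python, dropping the reference does not guarantee the string is wiped from memory; the sketch only enforces a lifetime policy.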

Instead of plaintext credentials, Personal Access Tokens (PATs) offer a more secure way to grant access without requiring a password or interactive sign-in. PATs are useful for CI/CD, scripts, and automated applications that need to access resources programmatically. To enhance security, limit PAT scopes, set expiration times, and support revocation, so that a leaked token has limited reach and can be shut off before it is used to hijack an account.
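The PAT practices above (narrow scopes, expiration, revocation) can be sketched as a small token store. This is a hypothetical Python illustration, not a specific provider's implementation: `PatStore`, the `pat_` prefix, and the scope names are assumptions. It persists only a SHA-256 hash of each token, so a leaked database does not expose usable tokens.

```python
import hashlib
import secrets
import time
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, Iterable, Optional


@dataclass
class PatRecord:
    scopes: FrozenSet[str]  # e.g. {"calendar:read"}; keep these minimal
    expires_at: float       # Unix timestamp after which the token is dead
    revoked: bool = False


@dataclass
class PatStore:
    # Keyed by SHA-256 hash: the raw token is never persisted.
    records: Dict[str, PatRecord] = field(default_factory=dict)

    @staticmethod
    def _hash(token: str) -> str:
        return hashlib.sha256(token.encode("utf-8")).hexdigest()

    def issue(self, scopes: Iterable[str], ttl_seconds: float,
              now: Optional[float] = None) -> str:
        now = time.time() if now is None else now
        token = "pat_" + secrets.token_urlsafe(32)
        self.records[self._hash(token)] = PatRecord(frozenset(scopes), now + ttl_seconds)
        return token  # show to the user once; it cannot be recovered later

    def revoke(self, token: str) -> None:
        record = self.records.get(self._hash(token))
        if record is not None:
            record.revoked = True

    def authorize(self, token: str, required_scope: str,
                  now: Optional[float] = None) -> bool:
        now = time.time() if now is None else now
        record = self.records.get(self._hash(token))
        if record is None or record.revoked or now >= record.expires_at:
            return False
        return required_scope in record.scopes
```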

User delegation via OAuth

OAuth (Open Authorization) is a widely used standard for delegated authorization on the web. It allows users to grant a third-party application limited access to their data on another service without sharing their login credentials.

In essence, OAuth solves the problem of secure access delegation: for example, you can authorize a travel app to read your Google Calendar without giving the app your Google password. This is achieved by having the user authenticate with the data provider (e.g. Google) and then issuing tokens to the third-party app instead of exposing the user’s credentials.
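In the authorization code flow, the user signs in at the data provider and the app receives a short-lived code that it later exchanges for tokens. The Python sketch below builds such an authorization request with PKCE (RFC 7636), which public clients such as agents should use so that an intercepted code is useless without the matching verifier. The endpoint, client ID, redirect URI, and scope names used with it are hypothetical placeholders, not Google's actual values.

```python
import base64
import hashlib
import secrets
from typing import Sequence, Tuple
from urllib.parse import urlencode


def pkce_challenge(verifier: str) -> str:
    """S256 code challenge from RFC 7636: BASE64URL(SHA256(verifier)), no padding."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def build_authorization_url(authorize_endpoint: str, client_id: str,
                            redirect_uri: str,
                            scopes: Sequence[str]) -> Tuple[str, str, str]:
    """Return (url, code_verifier, state); keep the last two for the callback."""
    verifier = secrets.token_urlsafe(64)  # 86 URL-safe chars, within 43..128
    state = secrets.token_urlsafe(16)     # CSRF protection for the redirect
    params = {
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": " ".join(scopes),        # ask only for what the task needs
        "state": state,
        "code_challenge": pkce_challenge(verifier),
        "code_challenge_method": "S256",
    }
    return f"{authorize_endpoint}?{urlencode(params)}", verifier, state
```

After the user approves, the provider redirects to `redirect_uri` with a `code` and the same `state`; the app checks `state` and sends `code` plus `code_verifier` to the token endpoint. The user's password never passes through the app.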

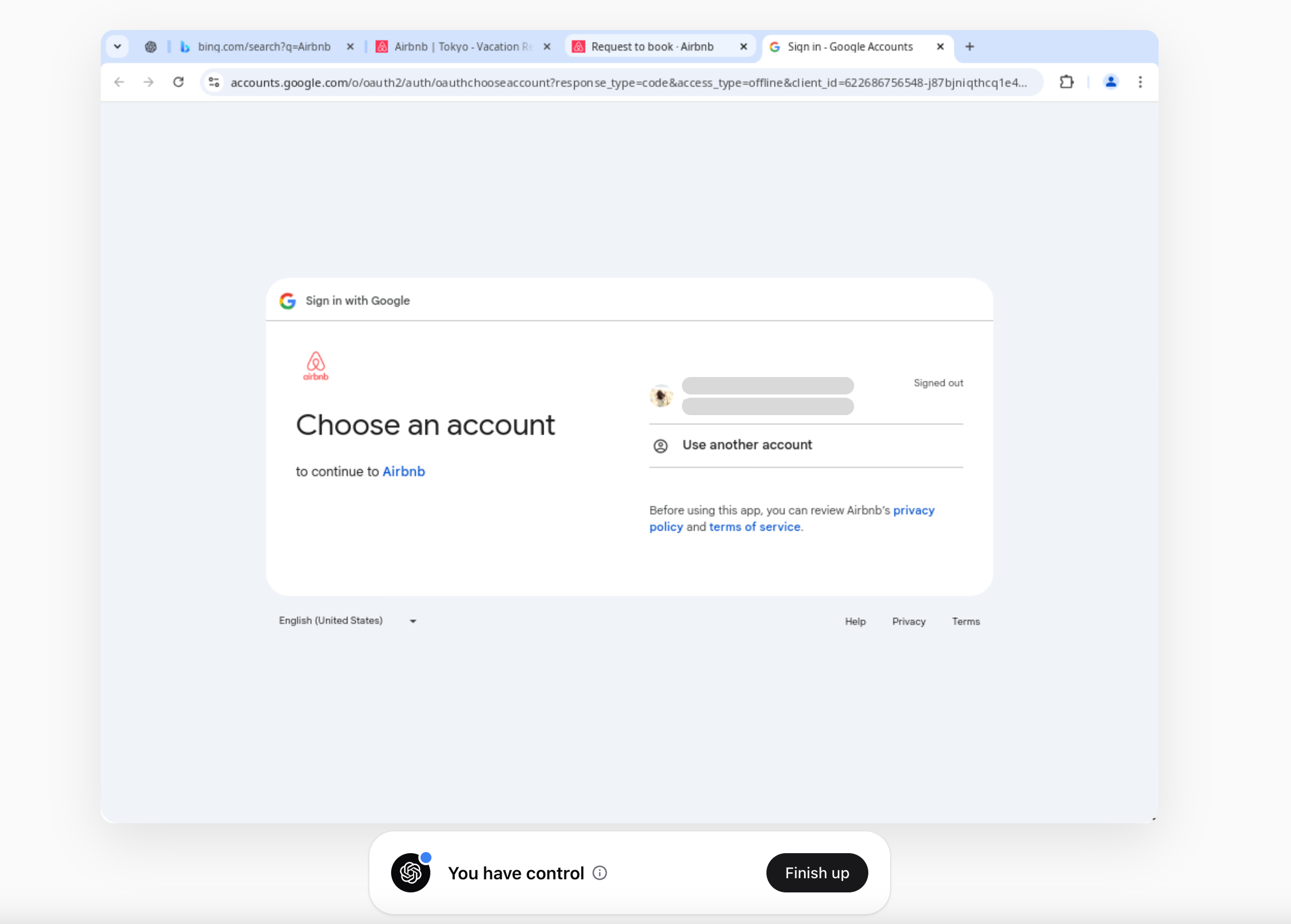

A better way to handle this scenario is to authorize ChatGPT Operator (or any other agent) to read and write data on Airbnb without sharing your password or other credentials. Instead of letting Operator log in directly, you grant access through a secure authorization process.

In my view, if OpenAI wants Operator to earn more trust and strengthen identity security, there are several ways to achieve this.

- Move the login process outside the Operator: handle user sign-in outside ChatGPT Operator. You might click a “Log in with [Service]” button and be redirected to the service's secure login page to authenticate, entirely outside the chat or Operator. For instance, if there were an Airbnb plugin, you would be sent to Airbnb's website to enter your credentials and authorize ChatGPT, and the plugin would then receive a token. ChatGPT would receive only a temporary access token or key that grants limited access (e.g. “read my itinerary”), and would never see your actual password.

- Have the user complete the consent flow before the AI agent performs any tasks. This is similar to how many products handle integrations, marketplaces, and connected services.
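Once the user has signed in and consented outside the agent, all the agent should hold is a scoped, expiring token. Here is a minimal Python sketch of turning a standard OAuth 2.0 token response (RFC 6749, section 5.1) into that record and checking it before each task. The scope names and the 300-second fallback lifetime are assumptions for illustration.

```python
import json
import time
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Optional

DEFAULT_LIFETIME_SECONDS = 300.0  # assumed fallback when expires_in is absent


@dataclass(frozen=True)
class DelegatedToken:
    access_token: str
    scopes: FrozenSet[str]  # what the user actually granted
    expires_at: float       # absolute Unix time


def parse_token_response(body: str, requested: Iterable[str],
                         now: Optional[float] = None) -> DelegatedToken:
    now = time.time() if now is None else now
    data = json.loads(body)
    if str(data.get("token_type", "")).lower() != "bearer":
        raise ValueError("expected a bearer token")
    # RFC 6749 section 5.1: "scope" may be omitted when it equals the request.
    granted = data.get("scope")
    scopes = frozenset(granted.split()) if granted else frozenset(requested)
    # "expires_in" is only RECOMMENDED, so fall back to a short assumed lifetime.
    lifetime = float(data.get("expires_in", DEFAULT_LIFETIME_SECONDS))
    return DelegatedToken(data["access_token"], scopes, now + lifetime)


def can_act(token: DelegatedToken, scope: str, now: Optional[float] = None) -> bool:
    """True only if the token is unexpired and the user granted `scope`."""
    now = time.time() if now is None else now
    return now < token.expires_at and scope in token.scopes
```

Because the check uses the granted scopes rather than the requested ones, a user who approves only "read my itinerary" leaves the agent unable to book anything.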

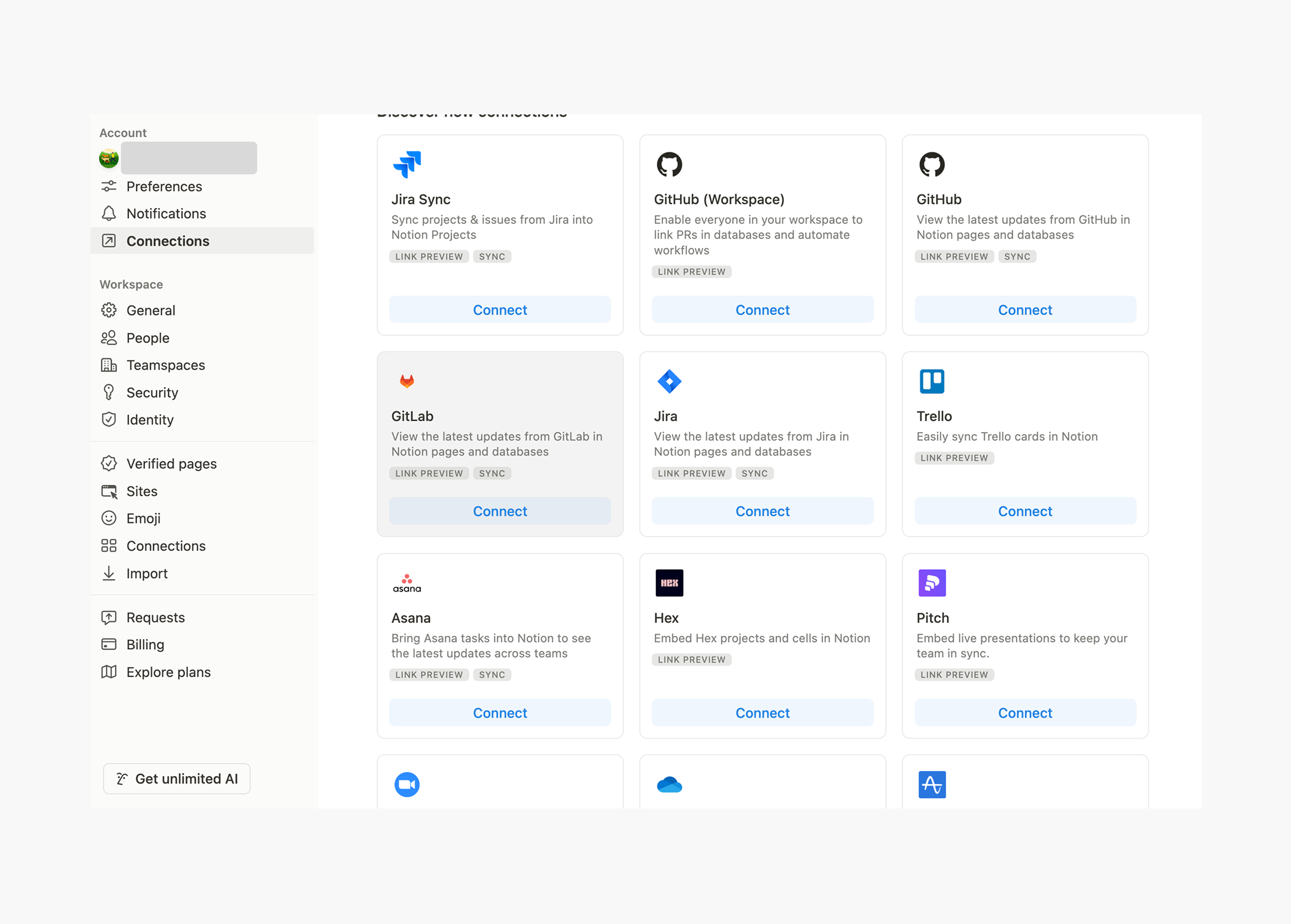

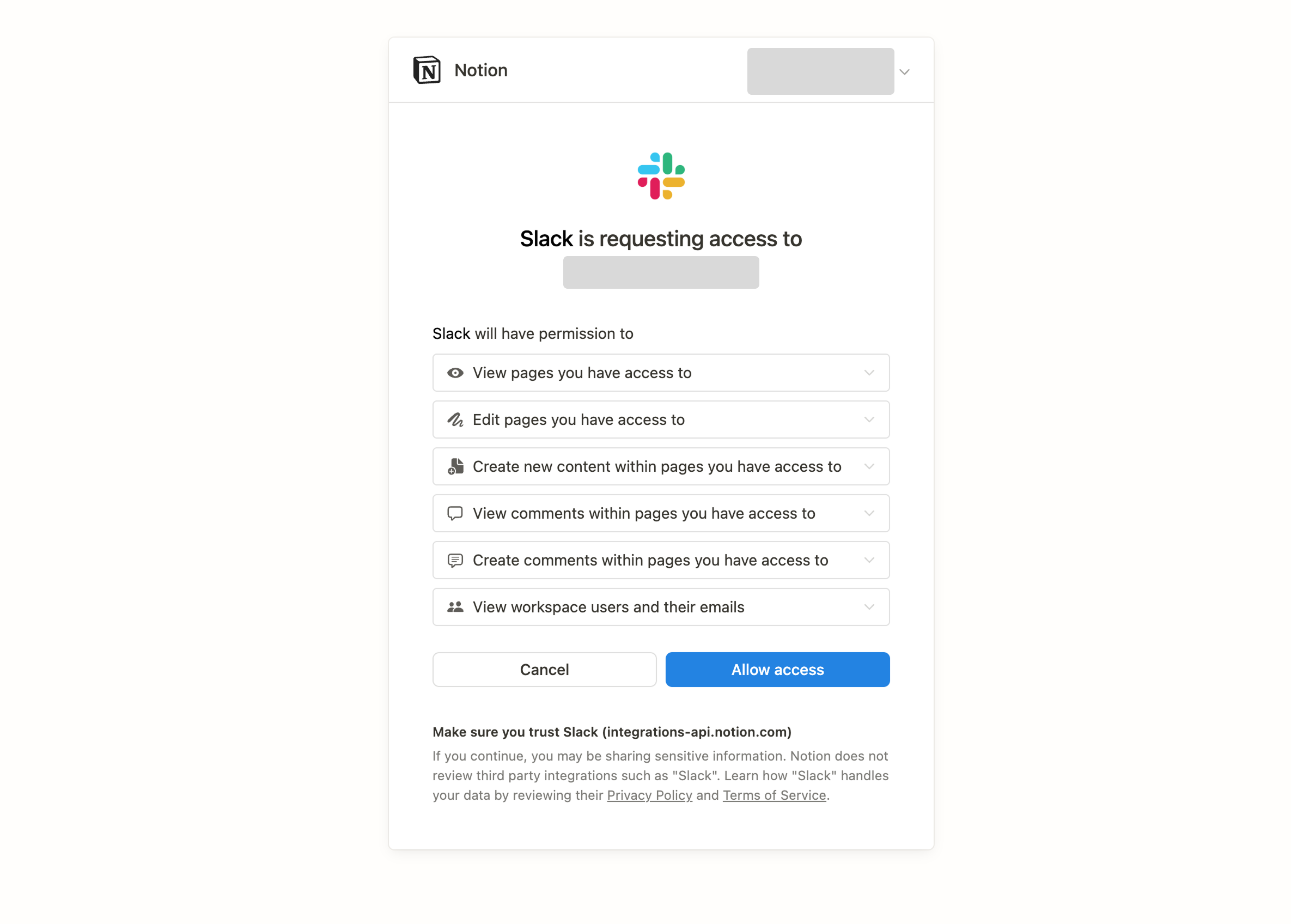

Notion connection pages

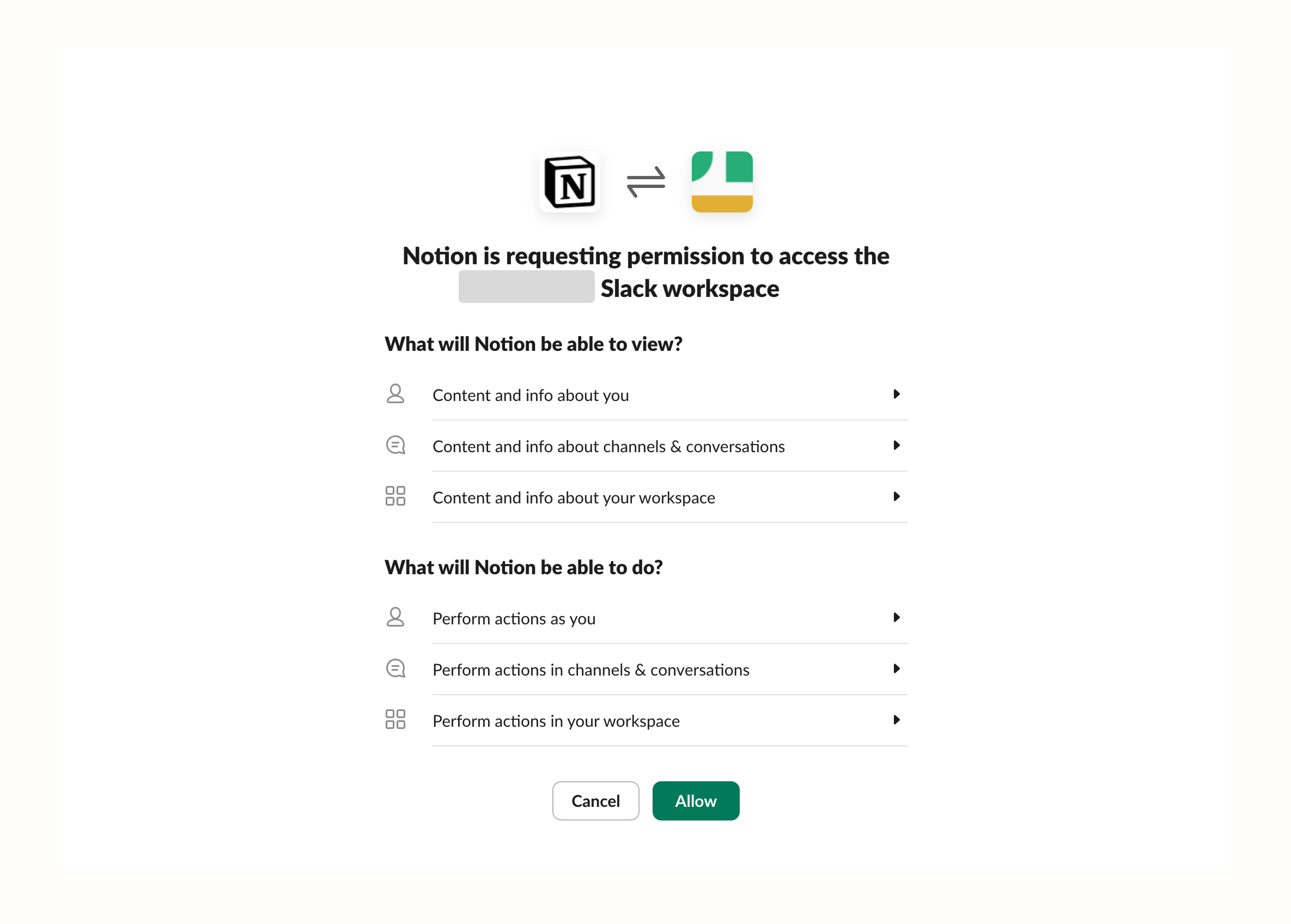

Notion connection pages

Here is another example: Slack's marketplace integration with Notion. Slack requests access to a specific Notion workspace so it can read its content and present it in your Slack channels.

At the same time, Slack provides its own consent page during this process, where you authorize Notion to access the workspace.

ChatGPT Operator should adopt a similar approach by integrating OAuth, allowing the agent to securely access multiple third-party services. This way, it can obtain access tokens with the necessary permissions to perform tasks safely.

Step-up authentication for sensitive actions

An AI agent can handle routine tasks autonomously, but high-risk actions, such as sending funds or modifying security settings, require additional verification: the user must confirm their identity through multi-factor authentication (MFA). This can be done via push notifications, one-time passwords (OTPs), or biometric confirmation.

However, step-up authentication frustrates users when it is triggered too often, so agent experiences need to weigh security against user friction in this new paradigm.

To enhance security without compromising user experience, adaptive authentication and MFA should be used to determine when additional verification is necessary. Risk-based triggers, such as IP changes or unusual behavior, help minimize unnecessary authentication requests.
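The adaptive approach above can be expressed as a small policy function. In this hypothetical Python sketch, risk signals (an unfamiliar IP or a high anomaly score) always trigger step-up, sensitive actions trigger it only when the user's last MFA is stale, and routine tasks run without interruption. The action names, the 0.7 threshold, and the five-minute freshness window are assumptions, not recommended values.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional

SENSITIVE_ACTIONS = frozenset({"send_funds", "change_security_settings"})  # hypothetical
ANOMALY_THRESHOLD = 0.7        # assumed cut-off for "unusual behavior"
MFA_MAX_AGE_SECONDS = 5 * 60   # assumed window in which a recent MFA is reused


@dataclass(frozen=True)
class RequestContext:
    action: str
    ip: str
    known_ips: FrozenSet[str]
    last_mfa_at: Optional[float]  # Unix time of the user's last MFA, if any
    now: float
    anomaly_score: float = 0.0    # 0..1 from some behavior model (assumed)


def needs_step_up(ctx: RequestContext) -> bool:
    # Risk-based triggers: an IP change or unusual behavior always re-verifies.
    if ctx.ip not in ctx.known_ips or ctx.anomaly_score >= ANOMALY_THRESHOLD:
        return True
    # Sensitive actions need an MFA that is recent enough.
    if ctx.action in SENSITIVE_ACTIONS:
        return ctx.last_mfa_at is None or ctx.now - ctx.last_mfa_at > MFA_MAX_AGE_SECONDS
    # Routine tasks in a familiar context proceed without prompting the user.
    return False
```

Reusing a fresh MFA for a burst of sensitive actions is what keeps the prompt count down without letting a stale session authorize a transfer.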

Federated identity and single sign-on (SSO) for multi-agent ecosystems

In a multi-agent enterprise ecosystem, AI agents often need to interact across different platforms. To streamline authentication, users authenticate once via an identity provider (IdP) such as Okta, Azure AD (now Microsoft Entra ID), or Google Workspace. The agents then authenticate using SAML or OpenID Connect (OIDC), with access managed through role-based (RBAC) or attribute-based (ABAC) access control.

This approach eliminates the need for users to log in multiple times while enhancing security and compliance through centralized identity management. It also allows for dynamic access policies, ensuring agents operate within defined permissions.
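With OIDC, each agent receives an ID token from the shared IdP and must validate it before trusting the identity it carries. The Python sketch below checks the core claims that OpenID Connect Core 1.0 asks clients to validate (`iss`, `aud`, `azp`, `exp`, `nonce`). It assumes the JWT signature has already been verified against the IdP's published keys by a JWT library; the issuer URL, client ID, and 60-second clock-skew allowance are placeholders.

```python
import time
from typing import Any, Dict, Optional

CLOCK_SKEW_SECONDS = 60  # assumed tolerance for clock drift between systems


def validate_id_token_claims(claims: Dict[str, Any], issuer: str, client_id: str,
                             nonce: Optional[str] = None,
                             now: Optional[float] = None) -> str:
    """Validate decoded ID token claims and return the user's subject (`sub`).

    Assumes the token's signature was already verified with the IdP's keys.
    Raises ValueError on the first failed check.
    """
    now = time.time() if now is None else now
    if claims.get("iss") != issuer:
        raise ValueError("unexpected issuer")
    aud = claims.get("aud")
    audiences = aud if isinstance(aud, list) else [aud]
    if client_id not in audiences:
        raise ValueError("token was not issued to this agent")
    if len(audiences) > 1 and claims.get("azp") != client_id:
        raise ValueError("multiple audiences but azp is not this agent")
    if now > float(claims.get("exp", 0)) + CLOCK_SKEW_SECONDS:
        raise ValueError("token expired")
    if nonce is not None and claims.get("nonce") != nonce:
        raise ValueError("nonce mismatch (possible replay)")
    return str(claims["sub"])
```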

Scope and permission management

Since operators and agents can act on behalf of users, it's important to give humans enough control and to carefully define AI agent permissions. Two key principles to follow are:

- Minimal privileges – Only grant the permissions necessary for the task.

- Time-sensitive access – Limit access duration to reduce security risks.

Role-Based Access Control (RBAC) helps manage an agent’s scope by assigning specific roles for certain tasks. For more granular control, Attribute-Based Access Control (ABAC) allows dynamic, context-aware permission management, ensuring AI agents only access what they need when they need it.
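To show how the two models combine, here is a hypothetical Python sketch: RBAC decides whether an agent's role includes a permission at all, and ABAC-style attribute checks then enforce the time-limited delegation window and restrict the agent to the delegating user's own resources. Role names, permission strings, and the chosen attributes are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet

# RBAC: each role maps to a minimal set of permissions (hypothetical names).
ROLE_PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "travel-assistant": frozenset({"itinerary:read", "booking:create"}),
    "calendar-reader": frozenset({"calendar:read"}),
}


@dataclass(frozen=True)
class AgentGrant:
    role: str
    on_behalf_of: str   # the user who delegated to the agent
    valid_from: float   # delegation window start (Unix time)
    valid_until: float  # delegation window end: time-limited access


@dataclass(frozen=True)
class Resource:
    owner: str


def is_allowed(grant: AgentGrant, permission: str, resource: Resource, now: float) -> bool:
    # RBAC: does the agent's role include this permission at all?
    if permission not in ROLE_PERMISSIONS.get(grant.role, frozenset()):
        return False
    # ABAC: attributes evaluated per request.
    if not (grant.valid_from <= now < grant.valid_until):
        return False  # the delegation window has closed (or not yet opened)
    return resource.owner == grant.on_behalf_of  # only the delegating user's data
```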

Connect MCP servers with auth

The Model Context Protocol (MCP) has become increasingly popular for enhancing AI agents with more contextual information, improving their overall performance and user experience.

Why is the MCP server related to authentication, and why does it matter?

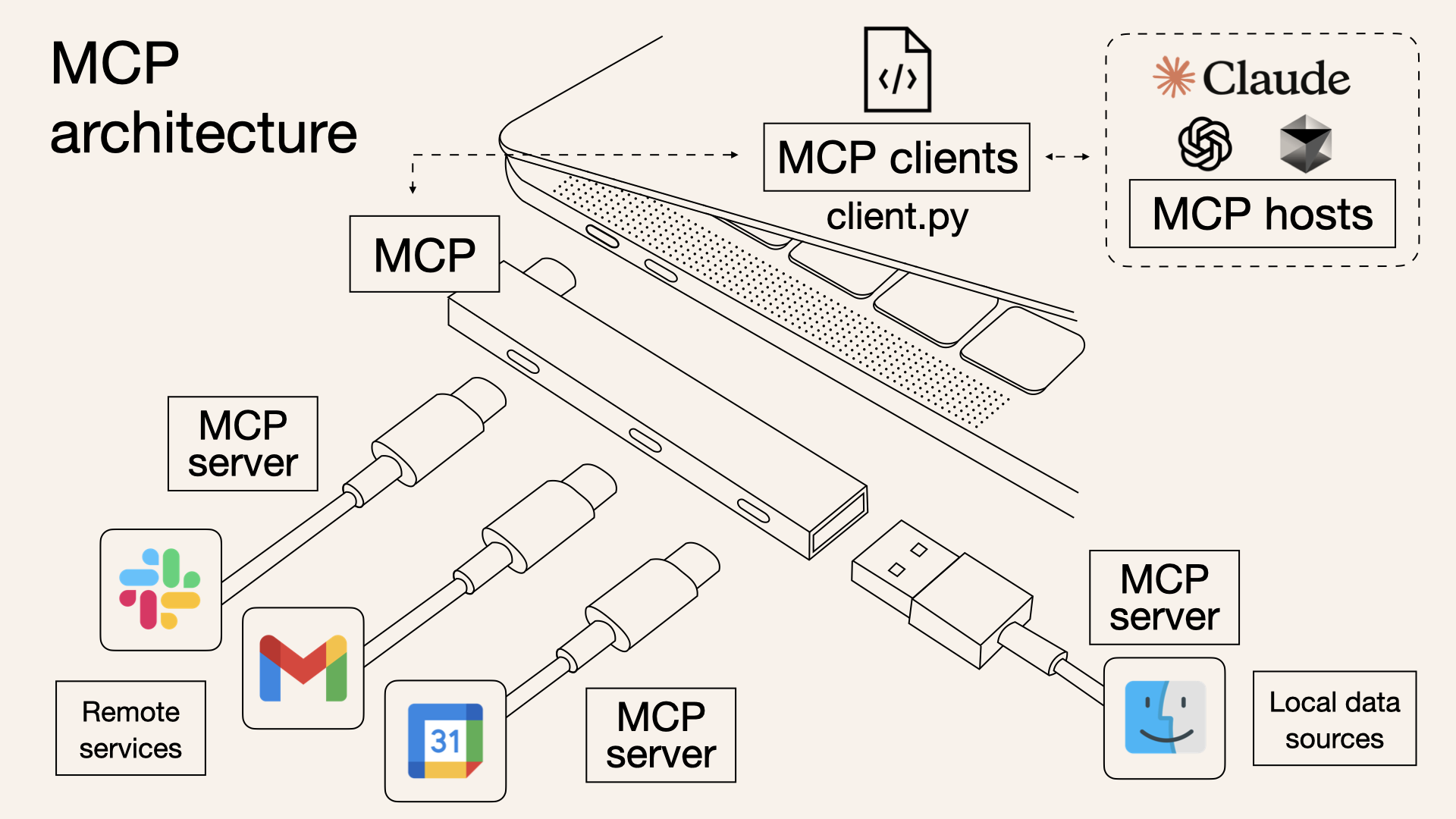

We previously wrote an article to help you understand what an MCP server is.

An MCP server is a key part of the Model Context Protocol, acting as a bridge between AI models and external data sources. It enables real-time queries and data retrieval from services like Slack, Gmail, and Google Calendar. By building an MCP server, you can connect these remote services to LLMs, enhancing your AI-powered applications with better context and smarter task execution.

Unlike Retrieval-Augmented Generation (RAG) systems, which require generating embeddings and storing documents in vector databases, an MCP server accesses data directly without prior indexing. This means the information is more precise and up to date, and the integration carries lower computational overhead without compromising security.

Reference: https://norahsakal.com/blog/mcp-vs-api-model-context-protocol-explained


For AI agents using an MCP server, multiple interactions occur between the MCP server, LLM, agent, and user.
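One of those interactions is the agent calling a tool on a remote MCP server. MCP messages are JSON-RPC 2.0, and for HTTP-based transports the MCP authorization spec has clients send an OAuth access token in a `Bearer` Authorization header. The Python sketch below only builds the request (it does not send it) to show where the token goes; the server URL, tool name, and arguments are hypothetical, and header details should be checked against the MCP spec revision you target.

```python
import json
import urllib.request
from itertools import count
from typing import Any, Dict

_request_ids = count(1)  # JSON-RPC ids should not repeat within a session


def build_tool_call(server_url: str, access_token: str, tool: str,
                    arguments: Dict[str, Any]) -> urllib.request.Request:
    """Build (but do not send) an authenticated MCP `tools/call` request."""
    payload = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
    return urllib.request.Request(
        server_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Streamable HTTP servers may reply with JSON or an SSE stream.
            "Accept": "application/json, text/event-stream",
            # Token obtained via OAuth for this user; never the user's password.
            "Authorization": f"Bearer {access_token}",
        },
        method="POST",
    )
```

Sending it with `urllib.request.urlopen(req)` would then let the MCP server authorize the call against the token's scopes, just as any other resource server would.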

In today's AI-driven world, where agents manage multiple tasks across different services, demand for integrating them with multiple MCP servers is growing quickly.

Agent authentication is emerging — your product should adapt

A great example is Composio.dev, a developer-focused integration platform that simplifies how AI agents and LLMs connect with external apps and services. Dubbed “Auth for AI Agents to Act on Users’ Behalf,” it essentially provides a collection of MCP servers (connectors) that can be easily integrated into AI-powered products.

Speaking as someone in the auth space, I'd say that despite the tagline, this covers just a small part of the broader CIAM (Customer Identity and Access Management) field. What they've built is a set of connectors: useful, but only a fraction of what full CIAM solutions encompass.

Building on the earlier examples, if we treat Google Drive (a remote service) as an MCP server instead of Airbnb, it becomes more than just a third-party integration: it serves as an external data source. This enables the agent to access contextual information, interact with the LLM, and potentially gain permissions to create, read, update, and delete (CRUD) files.
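For the Google Drive case, minimal privilege means requesting the narrowest OAuth scope each CRUD operation needs. The Python sketch below encodes our reading of three real Drive scopes (`drive.readonly`, `drive.file`, `drive`); the function itself is hypothetical, and Google's scope documentation should be treated as the authority on what each scope allows.

```python
DRIVE_READONLY = "https://www.googleapis.com/auth/drive.readonly"
DRIVE_FILE = "https://www.googleapis.com/auth/drive.file"
DRIVE_FULL = "https://www.googleapis.com/auth/drive"


def minimal_drive_scope(operation: str, app_created: bool = False) -> str:
    """Pick the narrowest Drive scope for one CRUD operation (hypothetical helper).

    app_created: True if the file was created by, or explicitly opened with,
    this app, which the per-file drive.file scope covers.
    """
    op = operation.lower()
    if op not in {"create", "read", "update", "delete"}:
        raise ValueError(f"unknown operation: {operation}")
    if op == "create" or app_created:
        return DRIVE_FILE      # per-file access to files the app works with
    if op == "read":
        return DRIVE_READONLY  # read any file, change nothing
    return DRIVE_FULL          # update/delete files the app did not create
```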

However, the core authentication and authorization requirements remain the same.

Use Logto to handle authentication for your agent products

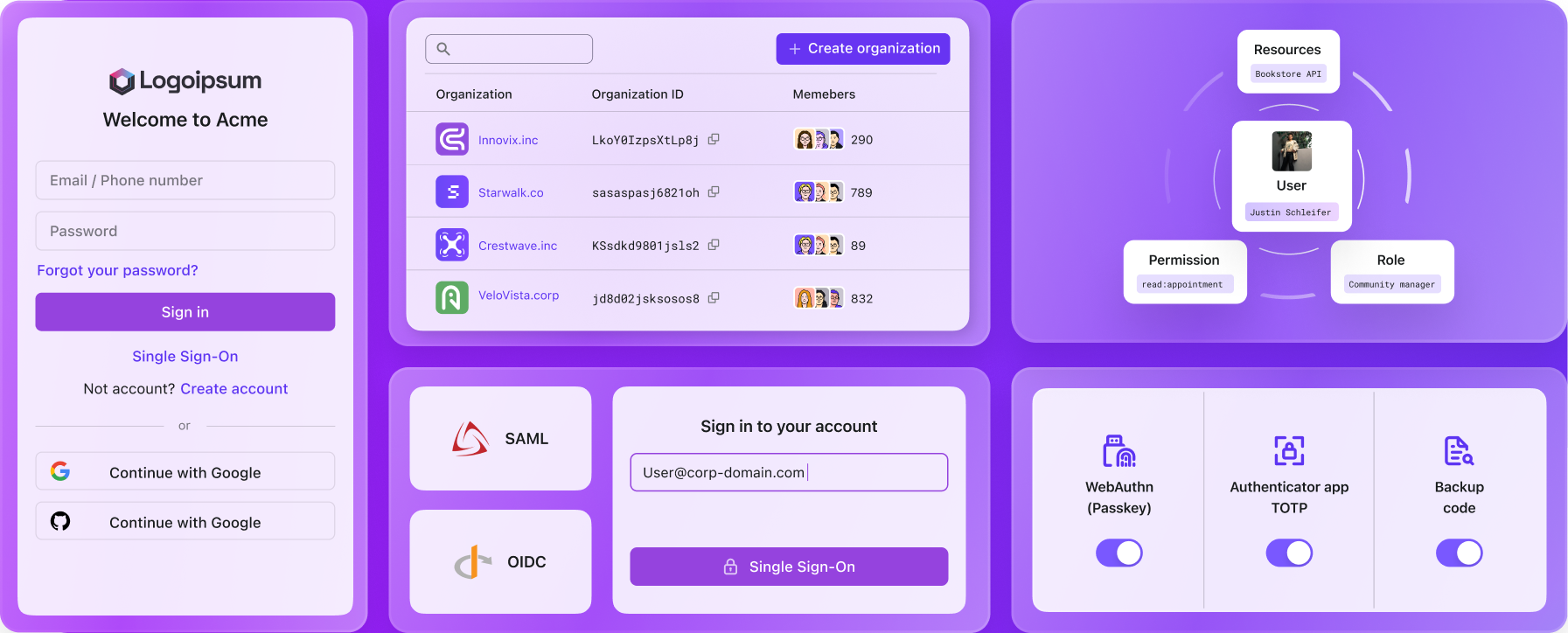

Logto is a versatile CIAM solution that supports SaaS and AI agent products, making authentication and authorization seamless. Here’s why:

- Managed authentication for AI agent products – Logto supports OAuth 2.0, SAML, API keys, Personal Access Tokens, and JWT, allowing easy integration with multiple MCP servers. You can even build your own MCP server and connect it to Logto, thanks to its open-standard foundation.

- Identity provider (IdP) capabilities – Once your product has established users, Logto can act as an IdP, transforming your service into an MCP server and integrating it into the AI ecosystem.

- Advanced authorization:

  - Role-based access control (RBAC) for managing user roles

  - Custom JWT-based ABAC for fine-grained, dynamic access control

- Enhanced security – Features like multi-factor authentication (MFA) and step-up authentication help secure critical actions and improve agent safety.

Have questions? Reach out to our team to learn how Logto can enhance your AI agent experience and meet your security needs.