What is MCP (Model Context Protocol) and how does it work?

An easy-to-understand guide to Model Context Protocol (MCP), explaining how it helps LLMs access external resources to overcome knowledge limitations and build more powerful AI applications.

What is MCP?

MCP (Model Context Protocol) is an open, universal protocol that standardizes how applications provide context information to large language models (LLMs).

Put simply, MCP is like the HTTP of the AI world. HTTP lets different websites and browsers exchange information under the same rules. In the same way, MCP lets different AI models connect to various data sources and tools through one standardized interface. Developers can then build AI applications without writing a specialized interface for each model or data source.

Why do we need MCP?

Large language models (LLMs) are powerful, but they face several key limitations:

- Knowledge limitations and update challenges: LLMs only know the information included in their training data. For example, GPT-4 Turbo's knowledge cuts off in April 2023. Training large language models requires enormous computing resources and time, and a new version often takes six months or longer to complete. This creates a difficult problem: model knowledge is always "outdated," while updates are extremely expensive and time-consuming. By the time a new model finishes training, its knowledge has already started to fall behind.

- Lack of specialized domain knowledge: LLMs are trained on publicly available, general-purpose data. As a result, they cannot deeply understand the specialized data and information of a specific business. For example, a medical institution's internal processes, a company's product catalog, or an organization's proprietary knowledge fall outside the model's training scope.

- No unified standard for accessing external data: There are currently many ways to provide additional information to LLMs, such as RAG (Retrieval-Augmented Generation), local knowledge bases, and internet searches. Different development teams offer different integration solutions, which leads to high integration costs between systems. Systems that hold specialized domain data (such as CRM, ERP, and medical record systems) are difficult to integrate seamlessly with LLMs. Each integration requires custom development because there is no common, standardized method.

This is why we need MCP. MCP provides a standardized protocol that lets LLMs access external information and tools in a consistent way, which addresses the problems above. MCP gives us the following key advantages:

- Rich pre-built integrations: MCP offers many ready-made server integrations. These include file systems, databases (PostgreSQL, SQLite), development tools (Git, GitHub, GitLab), network tools (Brave Search, Fetch), productivity tools (Slack, Google Maps), and more. You don't need to build these integrations from scratch. You can use the pre-built connectors to let LLMs access data in these systems.

- Flexible switching between LLM providers: Today you might use GPT-4. Tomorrow you might want to try Claude or Gemini, or use different LLMs for different scenarios. With MCP, you don't need to rewrite your application's integration logic. You change the underlying model, and your data and tool integrations stay the same.

- Building complex AI workflows: Imagine a legal document analysis system that needs to query multiple databases, use specific tools for document comparison, and generate reports. MCP lets you build complex agents and workflows like this on top of LLMs.
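Here is a concrete version of the provider-switching point. MCP describes each tool once, with a name, a description, and a JSON Schema `inputSchema`. A host can then translate that description into whatever format the current model's API expects. The minimal sketch below assumes the tool formats used by the OpenAI Chat Completions API and the Anthropic Messages API. The `get-order-status` tool and the adapter functions are hypothetical.

```typescript
// A tool described once, in the provider-neutral shape MCP uses:
// a name, a human-readable description, and a JSON Schema for its input.
interface ToolDefinition {
  name: string;
  description: string;
  inputSchema: Record<string, unknown>;
}

// Hypothetical tool exposed by some MCP Server.
const orderStatusTool: ToolDefinition = {
  name: "get-order-status",
  description: "Look up the current status of a customer order by its ID.",
  inputSchema: {
    type: "object",
    properties: { orderId: { type: "string" } },
    required: ["orderId"],
  },
};

// Hypothetical adapter for the OpenAI Chat Completions "tools" format.
function toOpenAITool(tool: ToolDefinition) {
  return {
    type: "function" as const,
    function: {
      name: tool.name,
      description: tool.description,
      parameters: tool.inputSchema,
    },
  };
}

// Hypothetical adapter for the Anthropic Messages API "tools" format.
function toAnthropicTool(tool: ToolDefinition) {
  return {
    name: tool.name,
    description: tool.description,
    input_schema: tool.inputSchema,
  };
}

type Provider = "openai" | "anthropic";

// Switching providers swaps the adapter; the tool definitions stay untouched.
function toolsFor(provider: Provider, tools: ToolDefinition[]): unknown[] {
  return provider === "openai" ? tools.map(toOpenAITool) : tools.map(toAnthropicTool);
}

console.log(JSON.stringify(toolsFor("anthropic", [orderStatusTool]), null, 2));
```

Only the adapter depends on the provider. The tool integrations themselves do not change when you switch models.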

How MCP works

MCP has three core roles:

- The MCP Server provides tools and data access.
- The MCP Client runs inside the host application and communicates with an MCP Server.
- The MCP Host is an application that integrates an LLM and MCP Clients, such as Claude Desktop or Cursor.

Let's look at each role in detail to see how they work together.

MCP Server

An MCP Server is a program that provides tools and data access for LLMs to use. Unlike a traditional remote API server, an MCP Server can run as a local application on the user's device or be deployed to a remote server.

Each MCP Server provides a set of specific tools that retrieve information from local data or remote services. While processing a task, the LLM may decide that it needs one of these tools. The call is then sent to the MCP Server that provides the tool. The server fetches the necessary data and returns it to the LLM.
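Under the hood, MCP messages use JSON-RPC 2.0. A client discovers tools with a `tools/list` request and invokes one with a `tools/call` request. The sketch below is a minimal, dependency-free server-side dispatcher for just those two methods. It makes some simplifying assumptions: it uses an in-process function call instead of a real transport such as stdio or HTTP, and it skips the initialization handshake a real server performs. The `lookup-product` tool and its catalog are hypothetical.

```typescript
// Minimal JSON-RPC 2.0 message shapes (only the fields this sketch uses).
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: { name?: string; arguments?: Record<string, unknown> };
}

interface JsonRpcResponse {
  jsonrpc: "2.0";
  id: number;
  result?: unknown;
  error?: { code: number; message: string };
}

// Hypothetical product catalog standing in for a real data source.
const catalog: Record<string, string> = {
  "sku-1": "Ergonomic chair, 12 in stock",
  "sku-2": "Standing desk, out of stock",
};

// The tools this server advertises in response to tools/list (hypothetical tool).
const tools = [
  {
    name: "lookup-product",
    description: "Return stock information for a product SKU.",
    inputSchema: {
      type: "object",
      properties: { sku: { type: "string" } },
      required: ["sku"],
    },
  },
];

function handleRequest(req: JsonRpcRequest): JsonRpcResponse {
  if (req.method === "tools/list") {
    return { jsonrpc: "2.0", id: req.id, result: { tools } };
  }
  if (req.method === "tools/call") {
    if (req.params?.name !== "lookup-product") {
      // Unknown tool: reported as an invalid-params protocol error.
      return { jsonrpc: "2.0", id: req.id, error: { code: -32602, message: "Unknown tool" } };
    }
    const sku = String(req.params?.arguments?.sku ?? "");
    const text = catalog[sku] ?? `No product found for ${sku}`;
    // A tool result carries a list of content items; here a single text item.
    return { jsonrpc: "2.0", id: req.id, result: { content: [{ type: "text", text }] } };
  }
  // Any other method is outside the scope of this sketch.
  return { jsonrpc: "2.0", id: req.id, error: { code: -32601, message: "Method not found" } };
}

const callResponse = handleRequest({
  jsonrpc: "2.0",
  id: 2,
  method: "tools/call",
  params: { name: "lookup-product", arguments: { sku: "sku-1" } },
});
console.log(JSON.stringify(callResponse.result));
// {"content":[{"type":"text","text":"Ergonomic chair, 12 in stock"}]}
```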

MCP Client

The MCP Client is the bridge between the LLM and MCP Servers. It is embedded in the host application and is responsible for:

- Receiving requests from the LLM

- Forwarding requests to the appropriate MCP Server

- Returning results from the MCP Server back to the LLM
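The three responsibilities above can be sketched as a small class. The client:

1. Takes a tool call the LLM asked for.
2. Wraps the call in a JSON-RPC `tools/call` request with a fresh `id` and sends it over a transport.
3. Hands the text result back.

To keep the sketch self-contained, the transport is a hypothetical synchronous function. Real MCP transports (such as stdio or HTTP) are asynchronous, and the official SDKs provide a complete client implementation. The `McpClientSketch` class and the `get-weather` tool are hypothetical.

```typescript
// Hypothetical transport: sends one serialized JSON-RPC message, returns the serialized reply.
// Synchronous here for simplicity; real transports are asynchronous.
type Transport = (message: string) => string;

// A tool call as requested by the LLM.
interface LlmToolCall {
  toolName: string;
  args: Record<string, unknown>;
}

class McpClientSketch {
  private nextId = 1;
  private readonly transport: Transport;

  constructor(transport: Transport) {
    this.transport = transport;
  }

  // 1. Receive a tool call requested by the LLM,
  // 2. forward it to the MCP Server as a JSON-RPC tools/call request,
  // 3. return the server's text content to the LLM.
  callTool(call: LlmToolCall): string {
    const id = this.nextId++;
    const request = {
      jsonrpc: "2.0",
      id,
      method: "tools/call",
      params: { name: call.toolName, arguments: call.args },
    };
    const reply = JSON.parse(this.transport(JSON.stringify(request))) as {
      id: number;
      result?: { content: Array<{ type: string; text?: string }> };
      error?: { message: string };
    };
    // Each response must answer the request with the same id.
    if (reply.id !== id) throw new Error(`Response id ${reply.id} does not match request id ${id}`);
    if (reply.error) throw new Error(`MCP Server error: ${reply.error.message}`);
    // Keep only text items; other content types are ignored in this sketch.
    return (reply.result?.content ?? [])
      .filter((item) => item.type === "text")
      .map((item) => item.text ?? "")
      .join("\n");
  }
}

// Usage with a fake transport that plays the role of an MCP Server.
const fakeServer: Transport = (message) => {
  const req = JSON.parse(message) as { id: number; params: { arguments: { city: string } } };
  return JSON.stringify({
    jsonrpc: "2.0",
    id: req.id,
    result: { content: [{ type: "text", text: `Sunny in ${req.params.arguments.city}` }] },
  });
};

const client = new McpClientSketch(fakeServer);
console.log(client.callTool({ toolName: "get-weather", args: { city: "Paris" } })); // Sunny in Paris
```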

See Develop an MCP Client to learn more about integrating an MCP Client with LLMs.

MCP Hosts

MCP Hosts are programs that want to access data through MCP, such as Claude Desktop, IDEs (Cursor, etc.), and other AI tools. These applications give users an interface for interacting with LLMs. They also integrate MCP Clients, which connect to MCP Servers so the LLM can use the tools those servers provide.

MCP Workflow

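Together, these three roles form an AI application built on MCP.

A typical workflow looks like this:

1. The user asks a question in the MCP Host.
2. The LLM decides whether it needs a tool to answer.
3. If it does, the MCP Client sends the tool call to the MCP Server, which fetches the data and returns it.
4. The LLM combines that result with the user's question to produce the answer.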
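The loop below condenses this workflow into code. It is a sketch with several stand-ins:

- The "LLM" is a hypothetical rule-based function. A real host would send the conversation and the tool list to a model API.
- The MCP Client and MCP Server are collapsed into a direct function call.
- The `get-exchange-rate` tool and its data are made up for illustration.

```typescript
// What the model can return: a request to call a tool, or a final answer.
type ModelTurn =
  | { kind: "tool_call"; tool: string; args: Record<string, string> }
  | { kind: "answer"; text: string };

// Hypothetical tool on an MCP Server, reduced to a plain function.
const serverTools: Record<string, (args: Record<string, string>) => string> = {
  "get-exchange-rate": (args) => (args.pair === "EUR/USD" ? "1.10" : "unknown"),
};

// Hypothetical stand-in for the LLM: requests a tool if the question needs one,
// then answers once it has the tool result.
function fakeModel(question: string, toolResult?: string): ModelTurn {
  if (toolResult === undefined && question.includes("EUR")) {
    return { kind: "tool_call", tool: "get-exchange-rate", args: { pair: "EUR/USD" } };
  }
  return toolResult === undefined
    ? { kind: "answer", text: "I can answer that without any tools." }
    : { kind: "answer", text: `The EUR/USD rate is ${toolResult}.` };
}

// Host: user question -> model -> (optional) tool call -> model -> answer.
function runHost(question: string): string {
  let turn = fakeModel(question);
  if (turn.kind === "tool_call") {
    const tool = serverTools[turn.tool];
    // In a real host, the MCP Client forwards this call to the MCP Server.
    const result = tool ? tool(turn.args) : `Tool ${turn.tool} is not available`;
    turn = fakeModel(question, result);
  }
  return turn.kind === "answer" ? turn.text : "The model did not produce an answer.";
}

console.log(runHost("What is the EUR to USD exchange rate?")); // The EUR/USD rate is 1.10.
```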

How to build an MCP Server

The MCP Server is the most critical link in the MCP system. It determines which tools the LLM can use to access which data, directly affecting the functional boundaries and capabilities of the AI application.

To start building your own MCP Server, we recommend first reading the official MCP quickstart guide. It details the complete process, from environment setup to implementing and using an MCP Server. Here we cover only the core implementation details.

Defining tools provided by MCP Server

The core functionality of an MCP Server is defining tools through the server.tool() method. These tools are functions that the LLM can call to obtain external data or perform specific operations. Let's look at a simplified example using Node.js:
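Here is a minimal sketch of such a tool. To stay runnable on Node.js without dependencies, it does not use the official SDK. Instead, it uses a hypothetical in-memory `ToolServer` class. Its `tool()` method roughly mirrors the shape of `server.tool()` in the official TypeScript SDK: a name, a description, input validation, and a handler. Check the SDK documentation for the real signature. A few other parts are also assumptions:

- Validation is hand-written; a real server would typically use a Zod schema.
- An in-memory array stands in for the knowledge base.
- The `maxResults` parameter name is illustrative.

```typescript
// Tool results: a list of content items (only text items in this sketch).
interface ToolResult {
  content: Array<{ type: "text"; text: string }>;
  isError?: boolean;
}

type ToolHandler<A> = (args: A) => ToolResult;

// Hypothetical stand-in for an MCP server's tool registry; not the SDK's McpServer.
class ToolServer {
  private handlers = new Map<string, { description: string; run: (raw: unknown) => ToolResult }>();

  // Register a tool: name, description the LLM reads, argument validator, handler.
  tool<A>(name: string, description: string, parse: (raw: unknown) => A, handler: ToolHandler<A>): void {
    this.handlers.set(name, { description, run: (raw) => handler(parse(raw)) });
  }

  // What a client sees when it lists the available tools.
  list(): Array<{ name: string; description: string }> {
    return Array.from(this.handlers, ([name, entry]) => ({ name, description: entry.description }));
  }

  // Validate the raw arguments, then run the handler.
  call(name: string, raw: unknown): ToolResult {
    const entry = this.handlers.get(name);
    if (!entry) throw new Error(`Unknown tool: ${name}`);
    return entry.run(raw);
  }
}

// Hypothetical knowledge base.
const knowledgeBase = [
  { title: "Refund policy", body: "Refunds are issued within 14 days of purchase." },
  { title: "Shipping times", body: "Orders ship within 2 business days." },
  { title: "Refunds for digital goods", body: "Digital goods are refundable before download." },
];

interface SearchArgs {
  query: string;
  maxResults: number;
}

// Hand-written validation; a Zod schema would normally do this job.
function parseSearchArgs(raw: unknown): SearchArgs {
  const obj = (raw ?? {}) as { query?: unknown; maxResults?: unknown };
  if (typeof obj.query !== "string" || obj.query.trim() === "") {
    throw new Error("query must be a non-empty string");
  }
  const maxResults = obj.maxResults ?? 5;
  if (typeof maxResults !== "number" || !Number.isInteger(maxResults) || maxResults < 1 || maxResults > 20) {
    throw new Error("maxResults must be an integer between 1 and 20");
  }
  return { query: obj.query, maxResults };
}

const server = new ToolServer();

server.tool(
  "search-documents",
  "Search the internal knowledge base and return the titles of matching documents.",
  parseSearchArgs,
  ({ query, maxResults }) => {
    const q = query.toLowerCase();
    const hits = knowledgeBase
      .filter((doc) => `${doc.title} ${doc.body}`.toLowerCase().includes(q))
      .slice(0, maxResults)
      .map((doc) => doc.title);
    const text = hits.length > 0 ? hits.join("\n") : "No matching documents.";
    return { content: [{ type: "text", text }] };
  },
);

console.log(server.call("search-documents", { query: "refund", maxResults: 1 }).content[0].text);
// Refund policy
```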

In this example, we define a tool called search-documents that accepts a query string and a maximum number of results as parameters. The tool's implementation would connect to a knowledge base system and return the query results.

The LLM will decide whether to use this tool based on the tool's definition and the user's question. If needed, the LLM will call this tool, get the results, and generate an answer combining the results with the user's question.

Best practices for defining tools

When building such tools, you can follow these best practices:

- Clear descriptions: Provide detailed, accurate descriptions for each tool, clearly stating its functionality, applicable scenarios, and limitations. This not only helps the LLM choose the right tool but also makes it easier for developers to understand and maintain the code.

- Parameter validation: Use Zod or similar libraries to strictly validate input parameters, ensuring correct types, reasonable value ranges, and rejecting non-compliant inputs. This prevents errors from propagating to backend systems and improves overall stability.

- Error handling: Implement comprehensive error handling strategies, catch possible exceptions, and return user-friendly error messages. This improves user experience and allows the LLM to provide meaningful responses based on error conditions, rather than simply failing.

- Data access control: Ensure backend resource APIs have robust authentication and authorization mechanisms, and carefully design permission scopes to limit the MCP Server to only accessing and returning data the user is authorized for. This prevents sensitive information leaks and ensures data security.
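One way to apply the error-handling advice is to write it once, as a wrapper around every tool handler. MCP tool results can set an `isError` flag. With that flag, a failure goes back to the LLM as a readable result it can reason about, instead of crashing the call. The sketch below assumes synchronous handlers. The `withErrorHandling` and `validationError` helpers and the `get-invoice` tool are hypothetical.

```typescript
interface ToolResult {
  content: Array<{ type: "text"; text: string }>;
  isError?: boolean;
}

// Hypothetical helper: marks an error whose message is safe to show to the LLM.
function validationError(message: string): Error {
  const err = new Error(message);
  err.name = "ValidationError";
  return err;
}

// Hypothetical wrapper: turns thrown errors into isError results.
function withErrorHandling<A>(handler: (args: A) => ToolResult): (args: A) => ToolResult {
  return (args) => {
    try {
      return handler(args);
    } catch (err) {
      if (err instanceof Error && err.name === "ValidationError") {
        return { content: [{ type: "text", text: `Invalid input: ${err.message}` }], isError: true };
      }
      // Unexpected failure: keep details in server logs, return a generic message.
      console.error("tool failed:", err);
      return {
        content: [{ type: "text", text: "The service is temporarily unavailable. Please try again later." }],
        isError: true,
      };
    }
  };
}

// Hypothetical tool that looks up an invoice in a backend.
const getInvoice = withErrorHandling((args: { invoiceId: string }): ToolResult => {
  if (!/^INV-\d+$/.test(args.invoiceId)) {
    throw validationError("invoiceId must look like INV-123");
  }
  if (args.invoiceId === "INV-500") {
    throw new Error("backend timeout"); // simulated backend outage
  }
  return { content: [{ type: "text", text: `${args.invoiceId}: paid` }] };
});

console.log(getInvoice({ invoiceId: "INV-42" }).content[0].text); // INV-42: paid
console.log(getInvoice({ invoiceId: "42" }).isError); // true
```

Only messages from validation errors reach the LLM verbatim. Internal details such as "backend timeout" stay in the server logs.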

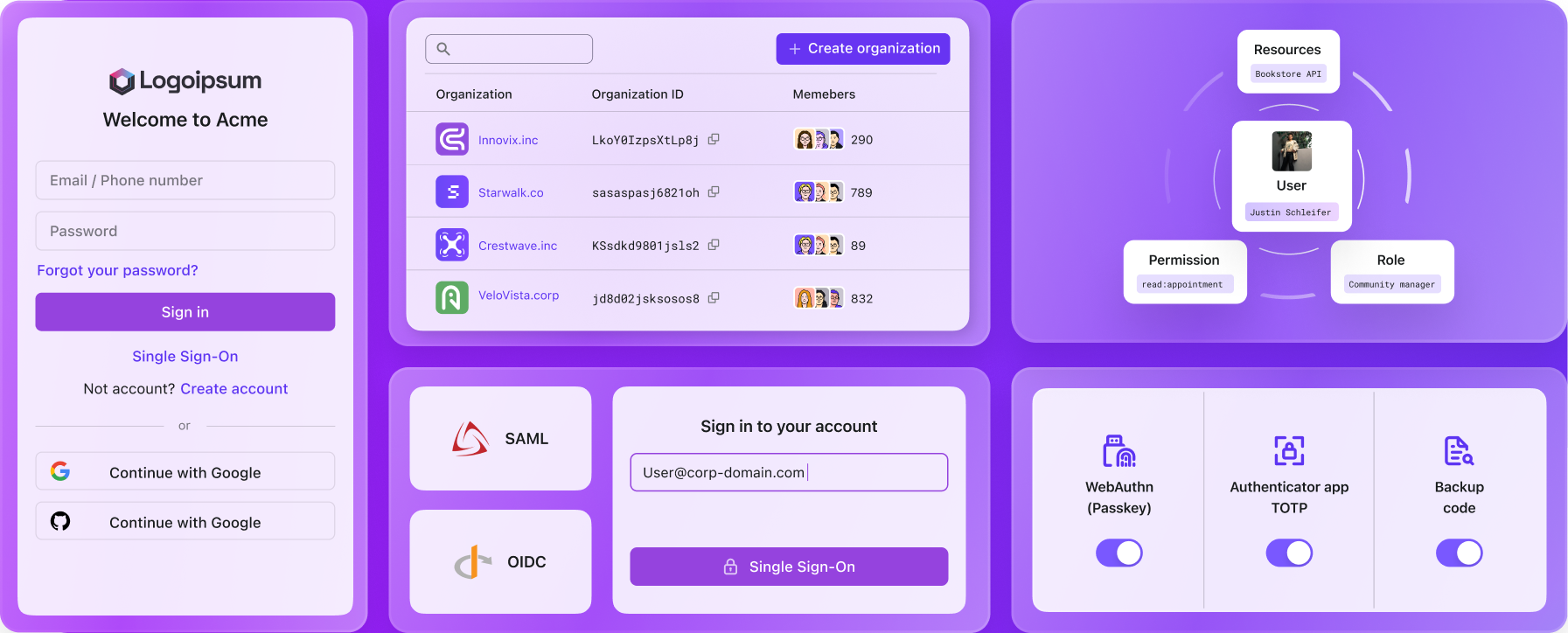

How to ensure the security of data exchange between MCP (AI models) and external systems

When you implement MCP Servers that connect AI models to external systems, you face two key security challenges:

- Authentication: Unlike in traditional applications, users in an MCP environment cannot sign in to the external system through a traditional login flow (such as username/password or email/verification code).

- Access control for MCP Server requests: Users accessing systems through AI tools are the same individuals who might directly use your system. The MCP Server acts as their representative when they interact through AI tools. Redesigning an entire access control mechanism just to accommodate the MCP Server would require significant effort and cost.

The key solution to these challenges is implementing Personal Access Tokens (PATs). PATs provide a secure way for users to grant access without sharing their credentials or requiring interactive sign-in.

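At a high level, the workflow works like this:

1. The user creates a PAT in your service.
2. The user configures the MCP Server with that PAT.
3. The MCP Server uses the token to authenticate its requests to your backend.
4. Your backend applies the same permissions that user already has.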

This approach allows your existing service to maintain its authentication mechanisms while securely enabling MCP integration.
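On the backend, a request authenticated with a PAT is then handled exactly like a request from the user who created it. The sketch below illustrates that idea. It is not Logto's API: the in-memory stores for tokens, users, and roles, and the `updateDocument` endpoint, are all hypothetical. A production service would also:

- Store only hashed tokens.
- Support expiry and revocation.
- Typically rely on its identity provider to validate tokens.

```typescript
// Hypothetical RBAC model: roles grant permissions, users hold roles.
const rolePermissions: Record<string, string[]> = {
  viewer: ["read:documents"],
  editor: ["read:documents", "write:documents"],
};

interface User {
  id: string;
  roles: string[];
}

const users: Record<string, User> = {
  alice: { id: "alice", roles: ["viewer"] },
  bob: { id: "bob", roles: ["editor"] },
};

// Hypothetical PAT store: token -> owning user and expiry (ms since epoch).
// Plain-text keys keep the sketch short; store token hashes in practice.
const personalAccessTokens = new Map<string, { userId: string; expiresAt: number }>([
  ["pat_alice_example", { userId: "alice", expiresAt: Date.now() + 86_400_000 }],
  ["pat_bob_example", { userId: "bob", expiresAt: Date.now() + 86_400_000 }],
]);

// Resolve "Authorization: Bearer <PAT>" to the user who issued the token.
function authenticate(authorizationHeader: string | undefined): User {
  const token = authorizationHeader?.startsWith("Bearer ") ? authorizationHeader.slice(7) : undefined;
  const record = token ? personalAccessTokens.get(token) : undefined;
  if (!record || record.expiresAt < Date.now()) throw new Error("401: invalid or expired token");
  const user = users[record.userId];
  if (!user) throw new Error("401: unknown user");
  return user;
}

// The MCP Server gets exactly the permissions of the user behind the PAT.
function authorize(user: User, permission: string): void {
  const allowed = user.roles.some((role) => (rolePermissions[role] ?? []).includes(permission));
  if (!allowed) throw new Error(`403: ${user.id} lacks ${permission}`);
}

// Hypothetical backend endpoint that the MCP Server calls on the user's behalf.
function updateDocument(authorizationHeader: string | undefined, docId: string): string {
  const user = authenticate(authorizationHeader);
  authorize(user, "write:documents");
  return `${docId} updated by ${user.id}`;
}

console.log(updateDocument("Bearer pat_bob_example", "doc-1")); // doc-1 updated by bob
```

The existing role checks do not change. The PAT only answers the question "which user is this?", which is why no separate access control system is needed for the MCP Server.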

For a complete source code example, see the blog post Empower your business: Connect AI tools to your existing service with access control. It shows how to combine Logto's Personal Access Tokens (PATs) with Role-Based Access Control (RBAC) to restrict which backend resources the MCP Server can access.

Summary

MCP (Model Context Protocol) significantly changes how LLMs can be combined with specific businesses. It addresses three problems: the knowledge limitations of large language models, their lack of specialized domain knowledge, and the absence of a unified standard for accessing external data.

Using MCP to connect your own services will bring new possibilities to your business. MCP is the bridge connecting AI with business value. You can create AI assistants that truly understand your company's internal knowledge, develop intelligent tools that access the latest data, and build professional applications that meet industry-specific needs.